Stephen Senn

Head of Competence Center for Methodology and Statistics (CCMS)

Luxembourg Institute of Health

Double Jeopardy?: Judge Jeffreys Upholds the Law

“But this could be dealt with in a rough empirical way by taking twice the standard error as a criterion for possible genuineness and three times the standard error for definite acceptance”. Harold Jeffreys(1) (p386)

This is the second of two posts on P-values. In the first, The Pathetic P-Value, I considered the relation of P-values to Laplace’s Bayesian formulation of induction, pointing out that that P-values, whilst they had a very different interpretation, were numerically very similar to a type of Bayesian posterior probability. In this one, I consider their relation or lack of it, to Harold Jeffreys’s radically different approach to significance testing. (An excellent account of the development of Jeffreys’s thought is given by Howie(2), which I recommend highly.)

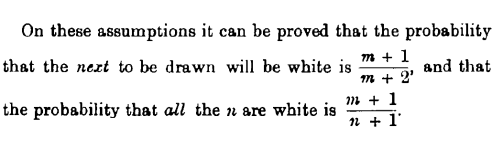

The story starts with Cambridge philosopher CD Broad (1887-1971), who in 1918 pointed to a difficulty with Laplace’s Law of Succession. Broad considers the problem of drawing counters from an urn containing n counters and supposes that all m drawn had been observed to be white. He now considers two very different questions, which have two very different probabilities and writes:

Note that in the case that only one counter remains we have n = m + 1 and the two probabilities are the same. However, if n > m+1 they are not the same and in particular if m is large but n is much larger, the first probability can approach 1 whilst the second remains small.

Note that in the case that only one counter remains we have n = m + 1 and the two probabilities are the same. However, if n > m+1 they are not the same and in particular if m is large but n is much larger, the first probability can approach 1 whilst the second remains small.

The practical implication of this just because Bayesian induction implies that a large sequence of successes (and no failures) supports belief that the next trial will be a success, it does not follow that one should believe that all future trials will be so. This distinction is often misunderstood. This is The Economist getting it wrong in September 2000

The canonical example is to imagine that a precocious newborn observes his first sunset, and wonders whether the sun will rise again or not. He assigns equal prior probabilities to both possible outcomes, and represents this by placing one white and one black marble into a bag. The following day, when the sun rises, the child places another white marble in the bag. The probability that a marble plucked randomly from the bag will be white (ie, the child’s degree of belief in future sunrises) has thus gone from a half to two-thirds. After sunrise the next day, the child adds another white marble, and the probability (and thus the degree of belief) goes from two-thirds to three-quarters. And so on. Gradually, the initial belief that the sun is just as likely as not to rise each morning is modified to become a near-certainty that the sun will always rise.

See Dicing with Death(3) (pp76-78).

The practical relevance of this is that scientific laws cannot be established by Laplacian induction. Jeffreys (1891-1989) puts it thus

Thus I may have seen 1 in 1000 of the ‘animals with feathers’ in England; on Laplace’s theory the probability of the proposition, ‘all animals with feathers have beaks’, would be about 1/1000. This does not correspond to my state of belief or anybody else’s. (P128)

Here Jeffreys is using Broad’s formula with the ratio of m to n of 1:1000.

To Harold Jeffreys the situation was unacceptable. Scientific laws had to be capable of being if not proved at least made more likely by a process of induction. The solution he found was to place a lump of probability on the simpler model that any particular scientific law would imply compared to some vaguer and more general alternative. In hypothesis testing terms we can say that Jeffreys moved from testing

H0A: θ ≤ 0,v, H1A: θ > 0 & H0B: θ ≥ 0,v, H1B: θ < 0

to testing

H0: θ = 0, v, H1: θ ≠ 0

As he put it

The essential feature is that we express ignorance of whether the new parameter is needed by taking half the prior probability for it as concentrated in the value indicated by the null hypothesis, and distributing the other half over the range possible.(1) (p249)

Now, the interesting thing is that in frequentist cases these two make very little difference. The P-value calculated in the second case is the same as that in the first, although its interpretation is slightly different. In the second case it is a sense exact since the null hypothesis is ‘simple’. In the first case it is a maximum since for a given statistic one calculates the probability of a result as extreme or more extreme as that observed for that value of the null hypothesis for which this probability is maximised.

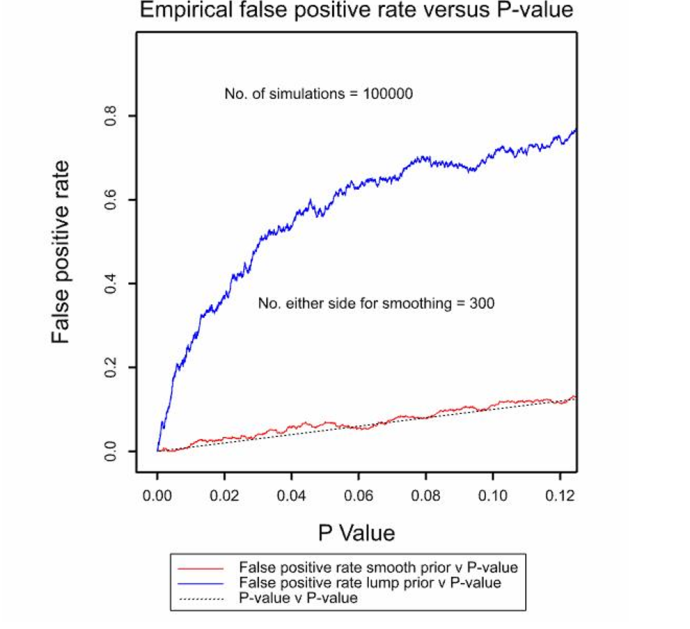

In the Bayesian case the answers are radically different as is shown by the attached figure, which gives one-sided P-values and posterior probabilities (calculated from a simulation for fun rather than by necessity) for smooth and lump prior distributions. If we allow that θ may vary smoothly over some range which is, in a sense, case 1 and is the Laplacian formulation, we get a very different result to allowing it to have a lump of probability at 0, which is the innovation of Jeffreys. The origin of the difference is to do with the prior probability. It may seem that we are still in the world of uninformative prior probabilities but this is far from so. In the Laplacian formulation every value of θ is equally likely. However in the Jeffreys formulation the value under the null is infinitely more likely than any other value. This fact is partly hidden by the approach. First make H0 & H1 equally likely. Then make every value under equally likely. The net result is that all values of θ are far from being equally likely.

This simple case is a paradigm for a genuine issue in Bayesian inference that arises over and over again. It is crucially important as to how you pick up the problem when specifying prior distributions. (Note that this is not in itself a criticism of the Bayesian approach. It is a warning that care is necessary.)

This simple case is a paradigm for a genuine issue in Bayesian inference that arises over and over again. It is crucially important as to how you pick up the problem when specifying prior distributions. (Note that this is not in itself a criticism of the Bayesian approach. It is a warning that care is necessary.)

Has Jeffreys’s innovation been influential? Yes and no. A lot of Bayesian work seems to get by without it. For example, an important paper on Bayesian Approaches to Randomized Trials by Spiegelhalter, Freedman and Parmar(4) and which has been cited more than 450 times according to Google Scholar (as of 7 May 2015), considers four types of smooth priors, reference, clinical, sceptical and enthusiastic, none of which involve a lump of probability.

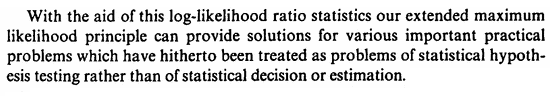

However, there is one particular area where an analogous approach is very common and that is model selection. The issue here is that if one just uses likelihood as a guide between models one will always prefer a more complex one to a simpler one. A practical likelihood based solution is to use a penalised form whereby the likelihood is handicapped by a function of the number of parameters. The most famous of these is the AIC criterion. It is sometimes maintained that this deals with a very different sort of problem to that addressed by hypothesis/significance testing but its originator, Akaike (1927-2009) (5), clearly did not think so, writing

So, it is clear that Akaike regarded this as being a unifying approach, to estimation and hypothesis testing: that which was primarily an estimation tool, likelihood, was now a model selection tool also.

So, it is clear that Akaike regarded this as being a unifying approach, to estimation and hypothesis testing: that which was primarily an estimation tool, likelihood, was now a model selection tool also.

However, as Murtaugh(6) points out, there is a strong similarity between using AIC and P-values to judge the adequacy of a model. The AIC criterion involves log-likelihood and this is what is also in involved in analysis of deviance where the fact that asymptotically minus twice the difference in log likelihoods between two nested models has a chi-square distribution with mean equal to the difference in the number of parameters modelled. The net result is that if you use AIC to choose between a simpler model and a more complex model within which it is nested and the more complex model has one extra parameter, choosing or rejecting the more complex values is equivalent to using a significance threshold of 16%.

For those who are used to the common charge levelled by, for example Berger and Sellke(7) and more recently, David Colquhoun (8) in his 2014 paper that the P-value approach gives significance too easily, this is a baffling result: significance tests are too conservative rather than being too liberal. Of course, Bayesians will prefer the BIC to the AIC and this means that there is a further influence of sample size on inference that is not captured by any function that depends on likelihood and number of parameters only. Nevertheless, it is hard to argue that, whatever advantages the AIC may have in terms of flexibility, for the purpose of comparing nested models, it somehow represents a more rigorous approach than significance testing.

However, it is easily understood if one appreciates the following. Within the Bayesian framework, in abandoning smooth priors for lump priors, it is also necessary to change the probability standard. (In fact I speculate that the 1 in 20 standard seemed reasonable partly because of the smooth prior.) In formulating the hypothesis-testing problem the way that he does, Jeffreys has already used up any preference for parsimony in terms of prior probability. Jeffreys made it quite clear that this was his view, stating

I maintain that the only ground that we can possibly have for not rejecting the simple law is that we believe that it is quite likely to be true (p119)

He then proceeds to express this in terms of a prior probability. Thus there can be no double jeopardy. A parsimony principle is used on the prior distribution. You can’t use it again on the posterior distribution. Once that is calculated, you should simply prefer the more probable model. The error that is made is not only to assume that P-values should be what they are not but that when one tries to interpret them in the way that one should not, the previous calibration survives.

It is as if in giving recommendations in dosing children one abandoned a formula based on age and adopted one based on weight but insisted on using the same number of kg one had used for years.

Error probabilities are not posterior probabilities. Certainly, there is much more to statistical analysis than P-values but they should be left alone rather than being deformed in some way to become second class Bayesian posterior probabilities.

ACKNOWLEDGEMENT

My research on inference for small populations is carried out in the framework of the IDEAL project http://www.ideal.rwth-aachen.de/ and supported by the European Union’s Seventh Framework Programme for research, technological development and demonstration under Grant Agreement no 602552.

REFERENCES

- Jeffreys H. Theory of Probability. Third ed. Oxford: Clarendon Press; 1961.

- Howie D. Interpreting Probability: Controversies and Developments in the Early Twentieth Century. Skyrms B, editor. Cambridge: Cambridge University Press; 2002. 262 p.

- Senn SJ. Dicing with Death. Cambridge: Cambridge University Press; 2003.

- Spiegelhalter DJ, Freedman LS, Parmar MKB. Bayesian Approaches to Randomized Trials. Journal of the Royal Statistical Society Series a-Statistics in Society. 1994;157:357-87.

- Akaike H. Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Czáki F, editors. Second International Symposium on Information Theory. Budapest: Akademiai Kiadó; 1973. p. 267-81.

- Murtaugh PA. In defense of P values. Ecology. 2014;95(3):611-7.

- Berger, J. O. and Sellke, T. (1987). “Testing a point null hypothesis: The irreconcilability of p values and evidence,” (with discussion). J. Amer. Statist. Assoc. 82: 112–139.

- Colquhoun D. An investigation of the false discovery rate and the misinterpretation of p-values. Royal Society Open Science. 2014;1(3):140216.

Also Relevant

- Cassella G. and Berger, R.. (1987). “Reconciling Bayesian and Frequentist Evidence in the One-sided Testing Problem,” (with discussion). J. Amer. Statist. Assoc. 82 106–111, 123–139.

Related Posts

P-values overstate the evidence?

P-values versus posteriors (comedy hour)

Spanos: Recurring controversies about P-values and confidence intervals (paper in relation to Murtaugh)

Stephen: Thanks so much for this post! Deforming the P-value indeed. Every case i look at that is supposed to provide a way to stop “overstating” the evidence actually permits inferring alternatives that are much more discrepant from the null than p-values permit. These are maximally likely alternatives. They are tantamount to inferring alternatives that would be lower bounds of a confidence interval with .5 confidence level.

Stephen

This post is interesting to me because my day job used to be fitting models to data by maximum likelihood and trying to distinguish which model was closest to being correct.

We were fitting rate constants for transitions (elements of a transition rate matrix) between discrete states of single protein molecules (an aggregated Markov process in continuous time). The data consist of about 60,000 dwell times in two observable states of the molecule

Only once have we used AIC, or BIC, or likelihood ratios in trying to solve this problem. See page 10934 in http://www.onemol.org.uk/?page_id=10#vb2004

I didn’t like them because they tended to give very low P values for fits that were indistinguishable by eye. Also our models are not always nested, and often have parameters on the edge of the parameter space, so they don’t obey all the assumptions of these tests. In fact, in that paper, we ended up opting for a model that was a slightly worse fit, because it was much more plausible based on what we know about the physics of the system (our models are physical, not the sort of empirical models that are more usual in statistics).

At a more empirical level, the distributions of dwell times are often with mixtures of exponential distributions, but again AIC etc make no sense. The number of exponential components is dictated by the model (i.e. by the physical nature of the molecule). If you can convince yourself that three components are needed than you should fit three components every time, regardless of whether the third component produces a fit that is a “significant” (yuk!) improvement on two components.

Now let’s return now to the question of interpretation of a P value found in comparing two means. I’m perfectly happy with your demonstration that, with appropriate choice of priors, the P value does not exaggerate the strength of evidence against the null

All I can say is that my aim, as an experimenter, is to rule out the possibility that the two treatments are the same. That’s a point null and that’s what I want to test. To take the example used in my paper, I want to rule out the possibility that placebo and drug are identical (as would be the case, for example, if the “drug” was a 30C homeopathic pill). The fact that you can get a different answer by postulating an arbitrary, and less appropriate, prior is neither here nor there.

The fact that my results are similar not only to those of Sellke & Berger, but also to Valen Johnson’s nice approach using uniformly most-powerful Bayesian tests, seems to me to add to the strength of case that P values, as commonly used. do indeed exaggerate the strength of the evidence against the null.

The problem is, perhaps, that while you know how to interpret a P value, most people don’t.

I suspect (correct me if I’m wrong) that we’d both say that the pervasive practice of calling P = 0.047 “significant”, and P= 0.053 “non-significant” is nonsense. And that we’d both say that observing P = 0.047 means something like “worth another look”, not “yikes I’ve made a discovery”.

David: first let me apologize for your comment getting caught in a spam box for absolutely no reason I can fathom.

Actually both Johnson’s and Berger and Sellke’s (and many other similar attempts) overstate the evidence against the null. They consider one and two sided testing with an n-fold IID Normal sample, known variance. Null is mu = 0. When they say they’re being most fair they consider the max likely alternative H*, and give .5 to the null and to H*. Johnson’s is one-sided (positive) but the same holds for Berger and Sellke, with double the p-value. With p = .025 (one-sided), z = 1.96, the Bayes factor of the max likely alternative over the null is 6.84, and H* gets a posterior probability of .87! (6.84/7.84) Even though we’re never told what the posterior means, presumably that’s some kind of decent evidence for H*. However, that’s to infer an alternative value which, as a lower confidence bound, is at level .5.

In other words, when we just reach z = 1.96, the error statistical tester would merely infer there’s an indication of some discrepancy from the null. A weak claim. We could give a series of lower bounds decently indicated, e.g., at confidence levels .9, .8. But we’d never say there’s any kind of decent evidence that mu = m (where m is the observed sample mean).

By asking after a comparison–a non-exhaustive one–they are turning the hypothesis test into a completely different question–comparing the null to a max likely alternative– and assigning them spiked priors (.5 each) ignoring all the rest. Where’s the discrepancy report, by the way?

Finally, they overstate even further because selection effects, optional stopping, cherry picking etc. do not alter the assessment. (see my last rejected post https://errorstatistics.com/2015/05/08/what-really-defies-common-sense-msc-kvetch-on-rejected-posts/).

David, I note that you are both rational and Bayesian your practice:

“In fact, in that paper, we ended up opting for a model that was a slightly worse fit, because it was much more plausible based on what we know about the physics of the system (our models are physical, not the sort of empirical models that are more usual in statistics).”

😉

I think David and I agree as regards these points

1) P-values should not be interpreted as posterior probabilities

2) P=0.05 is a weak standard of evidence

3) Where the problem is sufficiently well-structured to allow you to do more you should do so and just relying on P-values to interepret an experiment is silly.

Where we disagree (I think) is as follows

4) I see no point in modifying P-values as proposed by Johnson. Do a Bayesian analysis if you want and put your prior on the table. But what David and Johnson seem to be proposing is neither fish nor fowl nor good red herring.

5) I disagree that Jeffreys lump priors are generally more appropriate than Laplace smooth ones but in fact I think that for most practical cases neither are appropriate

6) I disagree that 1/20 is an appropriate posterior probability to aim for. I don’t believe in Double jeopardy. If you get your prior distribution right (and I have published some papers showing it is hard [1-3]) then the best model is the one that is most likely. given the data (although your Bayseian inference should involve a model mixture).

Thanks Stephen. Now we are getting somewhere. It seems that we agree on the important questions.

So let’s deal with the remaining points of disagreement.

(4) I don’t think that anyone is saying that it’s possible to find a unique relationship between P values and false positive rates. Clearly there isn’t. But since the latter is what experimenters want to know, that immediately poses a problem for P values. Both Johnson and Berger suggest ways of dealing with the unknown prior, and although the methods are different, both come up with broadly similar results. And their conclusions are consistent with my simpler approach. The idea of uniformly-most-powerful Bayesian (UMPBT) tests seems like sensible approach to the problem. What’s wrong with it? I can’t do a “proper Bayesian analysis” because they can give any answer you want (see next point).

(5) The real disagreement seems to lie in our attitude to priors. The point prior seems to me, as experimenter, to be exactly what I want to test (admittedly that could be influenced by the fact that it’s what has been taught by generations of statisticians). As you have often pointed out, if you ask a subjective Bayesian how to analyse your experiment, you are likely to get as many answers as there are Bayesians. Insofar as that’s true, all they do is to bring statistics into disrepute (as people who can help you make sense of data). That’s why I have never found (subjective) Bayesian approaches to be of any practical value. And that’s why I find the approach via UMPBTs so interesting.

(6) “I disagree that 1/20 is an appropriate posterior probability to aim for.” That doesn’t seem to me to be a disagreement at all. I used 1/20 in my examples for obvious reasons, but you can choose any value you like.

You go on ” If you get your prior distribution right (and I have published some papers showing it is hard [1-3]) …”. While true, this is totally unhelpful in practice, because of the “If”.

The philosophical discussions are fascinating and ingenious, but if we agree that one job of statisticians is to help non-statisticians to make sensible decisions, it would be a real help if they they didn’t spend so much time squabbling with each other!

Stephen: I”m interested to see that the false positive rate is higher with the point priors in contrast to that of the P-value. Can you please explain how this happens, as against the claims by Ioannidis and others that the P-value gets high false positives. Doesn’t he use a point prior?

The false positive rate is that associated with using a particular threshold P-value against which it is plotted. This false positive rate will then depend on the prior distribution.

Imagine the case were you have a smooth very vague prior (perhaps a Normal distribution with very large variance) and you are testing a dividing hypothesis with negative values of the parameter forming the null (and perhaps the value zero also) and positive values forming the alternative. Suppose that the test statistic is positive. Now pick a given value of the parameter mu, mu’ under H1. The corresponding equally probable value a priori under H0 is -mu’. Since the statlstic is positive, the likelihood for mu’ is greater than the likelihood for -mu’, whatever value of mu’ you originally chose. Every value under H1 is given more support than the corresponding value under H0.

Now consider the same exercise for the point null. Now every value of mu’ under H1 is matched with a value zero chosen from the prior lump under H0 (there is an inexhaustible supply of such zeroes). Some of these values of mu under H1 are actually further away from the test statistic than is the value 0 under H0. Hence there are now many pairs of values (a particulare value mu’ and the value under H0 of 0) for which the likelihood actually favours the null. This is why the null now has much more support.

This is the origin of the difference and it is this point I was referring to in my first post on the subject (https://errorstatistics.com/2015/03/16/stephen-senn-the-pathetic-p-value-guest-post/ ), namely that the problem that Bayesians perceive with P-values is not necessarily a problem with P-values per se, it is a consequence of the senstivity that Bayesian inferences have to prior distributions. This sensitivity may be good or bad but that’s not my point. My points are that a) P-values don’t use prior distributions and b) the disagremment between Laplace type and Jeffreys type inferences will not go away by abandoning P-values.

Stephen:

> calculated from a simulation for fun rather than by necessity

Is it not time we stop being apologetic for “stooping to simulations” to make technical points in statistics clearer (to ourselves as well as) others?

Yes there is real value in understanding things analytically, but these are limited to unrealistically hopeful situations with no (or special) nuisance parameters – everything else is asymptotic (and here Charlie Geyer questions the cost effectiveness of analytic insight there.).

We both know and have caught others (even Phd statisticians) not actually understanding what is going on/happening in statistical analyses and arguments so why _disparage_ easier ways for them to understand? (I note you have never received any replies to your comments for the need to deal with nuisance parameters on this blog.)

Admittedly, it is a challenge to help people actually understand what is going on in simulation.

Here is an animation to help folks get how Bayesian analyses can be understood and undertaken as two stage direct simulation (using Galton’s 1885 two stage quincunx that he used to implement Bayesian analysis.) https://galtonbayesianmachine.shinyapps.io/GaltonBayesianMachine/

Keith O’Rourke

Nice posting, thanks.

A key issue for me here is always that I don’t believe any model to hold precisely. Particularly in model selection the issue to me never is to find the “correct” model but rather one that fits the purpose (there can be more than one that does it). In order to think about how to do it, the following questions always arise:

a) Is there really a problem to use a “too big” model given that parameters that we imagine to be zero (which they hardly are precisely – ignoring the fact that I don’t think there is anything like a “true parameter” in nature) will be estimated close to zero anyway, and will therefore not do much harm to prediction? (They may do harm under some circumstances though.)

b) On the other hand, how small a true parameter would we actually want to estimate to zero in the given situation, given that we do model selection for a purpose and there is probably a reason to be happy in most situations about not carrying around parameters of size 10^{-8} even if they were true?

All these considerations about what happens under the true H0 are of course informative and important, but still the discussion needs to acknowledge that ultimately there is no true model and we want to achieve other things than finding it.

I would like to add to the controversy by disagreeing with David and Stephen’s assertion that ‘P’ P-values should not be interpreted as posterior probabilities!. A one-sided ‘P value’ of 0.025 means that if we repeatedly selected N subjects from a population with a mean of Ho (the null hypothesis) then the probability of getting the study result (Sr) or something more extreme (i.e. which is outside the ‘replicated discovery range)’ is 0.025. But the converse is symmetrical. If we were to select N subjects from a population with a mean of Sr, then the probability of getting a result of Ho or something more extreme would also be 0.025. However, if Ho represented ‘no difference’, then a result of exactly Ho could hardly be regarded as replicating the observed ‘difference’ represented by the original result Sr. For example, if it was agreed that a difference of one SEM above Ho could be regarded as ‘inside the replicated discovery range’ (InRDR) then the one-sided false discovery rate (the probability of being ‘outside the replicated discovery range’ (OutRDR) based on the numerical result alone) would be about 0.158. The false discovery rate would depend on what was regarded as the range of results that could be regarded as replicating the study result Sr that had been ‘discovered’. In order to get a false discovery rate of 0.025, the edge of the ‘discovery range’ (e.g. Ho) would have to lie at 3SEMs below Sr.

.

This approach can be regarded as ‘sampling’ a future result based on N observations from all pooled past studies with a numerically identical result of Sr and distribution, based on an identical number N observations. This observed numerical result is only one ‘fact’ about the study however. Other information will also have to be taken into account usually. The Bayesian approach is to estimate subjectively the probability of a subsequent study result lying within some specified range (termed a credibility interval – in this case the range of results that would replicate the study result Sr), conditional on these combined facts but prior to seeing the study result Sr and therefore not including the study result Sr. These Bayesian ‘facts’ (Bf) would include what has been read about previous studies and meta-analyses, the reliability of the methods used, how well they were described, the possibility of unwitting biases and so on. The Bayesian would then multiply this prior conditional odds of p(OutRDR/Bf) / p(InRDR/Bf) by the likelihood ratio of p(Sr/OutRDR) / p(Sr/InRDR) to give the new estimated posterior odds of p(OutRDR/Bf∩Sr) / p(InRDR/Bf∩Sr). There may be an over-estimate here because of the assumption of statistical independence.

.

But we need to know the likelihood ratio of p(Sr/OutRDR) / p(Sr/InRDR). If we assume that the unconditional probabilities p(OutRDR) / p(InRDR) is ≤ 1, then

p(OutRDR/Sr) / p(InRDR/Sr) ≤ p(Sr/OutRDR) / p(Sr/InRDR).

(In other words the likelihood ratio is less than or equal to the odds. )

.

Therefore:

p(OutRDR/Bf∩Sr) / p(OutRDR/Bf∩Sr) ≤ [p(OutRDR/Bf) / p(InRDR/Bf)] x [p(OutRDR/Sr) / p(InRDR/Sr)]

.

An assumption of p(OutRDR) / p(InRDR) is ≈ 1 may tend to provide an underestimate whereas statistical independence might provide an over-estimate, both assumptions perhaps providing a fair estimate. (This can only be checked by calibrating the probabilities in some way of course.)

For example, if the p(InRDR/Sr) = 0.95 and p(InRDR/Bf) = 0.8 then p(InRDR/ Bf∩Sr) ≈

[1+ 0.05/0.95*0.2/0.8]-1 ≈ 0.987.

.

However, if the p(InRDR/Sr) = 0.95 and p(InRDR/Bf) = 0.5 then p(InRDR/ Bf∩Sr) ≈

[1+ 0.05/0.95*0.5/0.5]-1 ≈ 0.95 (so 1-P can be interpreted as a posterior probability).

.

And if p(InRDR/Sr) = 0.95 and p(InRDR/Bf) = 0.2 then p(InRDR/ Bf∩Sr) =

[1+ 0.05/0.95*0.8/0.2]-1 ≈ 0.826.

.

I have used this simple expression to combine a non-transparent subjective probability of a diagnosis with a transparent probability based on a calculation (see the first paragraph of http://blogs.bmj.com/bmj/2015/04/28/huw-llewelyn-the-way-forward-from-rubbish-to-real-ebm-in-the-wake-of-evidence-live-2015/). The only way of checking such ‘estimated’ probabilities based on un-testable assumptions (including Bayesian probabilities,) is to calibrate them in some way of course.

My apologies for two inadvertent insertions. Please regard as deleted ‘P’ in line 2; and in lines 6 and 7 regard as deleted ‘(i.e. which is outside the ‘replicated discovery range’)’

Well said Christian,

Applied statistical efforts undertake to identify useful models that approximate what we see in the world. Often there are several candidates that do a reasonable job of mimicking real world measured data.

Fisher and others a hundred years ago were developing methods for people faced with a few dozen observations, and in that scenario, the infamous “p < 0.05" evolved, as no one had a calculator or computer with which to calculate p-values so that tables of critical values were valuable tools. Generating a table of critical values for the F distribution, or the Student's t distribution took many people many days or weeks to compile.

So David Colquhoun's example of a data set of 60,000 dwell times is a modern data set for which the old p < 0.05 small data set paradigm is of course inappropriate. Of course with 60,000 data points, almost any comparison of differing models or parameter values will be associated with small p-values. Comparing those p-values to the small sample 100 year old paradigm of alpha = 0.05 critical value tables is inappropriate. But the p-values themselves, small though they may be, can still be scrutinized to rank competing models, just as can AIC or BIC or any number of other statistics.

Colquhoun is correct to entertain models that also comport with the physical characteristics of the system under study, because of course the idea is to find a representative model, not just find p-values below some threshold.

The issue now is that we have computers, and can evaluate huge sets of data, and thus need new analytical paradigms for guiding reasonable model choices and other statistical decision making efforts.

I see nothing pathetic about a p-value – it is just a statistic with some very interesting distributional properties under various scenarios. What is pathetic is shoe-horning an analysis situation (e.g. modern data sets of thousands or millions of values) into an old paradigm developed for use with smaller data sets and then decrying the silly outcomes that ensue. That's poor and fallacious philosophical practice. (I'm not pointing a finger at anyone here, or meaning to disparage anyone, just using materials in this blog post as illustration.)

The reason this blog, and Mayo's current philosophical efforts are so valuable is that we need to develop new paradigms for new data scenarios. The p < 0.05 paradigm was developed through years of philosophical debate in the small data set era of the early 20th century and served well then. We need renewed philosophical debate to develop reasonable decision making and model fitting paradigms in this era of huge amounts of data where some scenarios yield data with parameter space dimensionality far larger than the data space dimensionality and so on. Bradley Efron's paper "Scales of Evidence for Model Selection: Fisher versus Jeffreys" is one such valuable excursion down this pathway – more such philosophical debate is needed to yield reasonable model selection paradigms in this big data era. Mayo's Severe Testing concepts are also valuable, and will involve different measures or rule sets for data sets from such different dimensionalities.

The differences in performance seen between some Bayesian and other Error Statistical approaches points to the need to reassess analytical paradigms and develop new ones for modern problems, not vilify a particular statistic that served well in prior times and still does, in appropriate scenarios, today. Long live the p-value!

We may have 60,000 dwell times, but they have a huge variance. They are distributed as a mixture of exponential distributions and the means of the individual exponentials may cover the range from a few microseconds up to a few seconds. 60,000 is not as big as it sounds.

Christian Hennig says that “The issue to me never is to find the “correct” model but rather one that fits the purpose.” Steven McKinney concurs that “The differences in performance seen … between … approaches points to the need to develop new ones for modern problems”. One modern problem is to get people from different disciplines to understand statistics by relating it to their own educational background and experience.

.

My concepts of statistics are coloured by my background as a physician. My concept of sampling is not an attempt to use a set of observations to estimate the parameters of a larger population (something that interested R. A. Fisher when using a small sampling frame to estimate plant proportions in a large field). Instead, my inclination is to assume that the next patient can be regarded as an element of the set of past patients. For example if there are 9 patients with central crushing chest pain and 6 have angina, the probability of one of these 9 patients in the set having angina is 0.67. However if a 10th patients arrives into the set with central chest pain, then the probability of that patient in the new set with an extra element having angina is either 6/10 or 7/10 (depending on what is eventually found). So the set allows me to measure the probability much in the same way as a ruler graduated in millimetres allows me to measure a length of ≥6/10cm to <7/10cm. The pair of values also allows me to know the original proportion in order make other calculations. A larger set would provide a more precise probability, where the interval between the upper and lower value approaches zero.

.

I would also regard an observation of 6/9 as an element of the set of all 6/9 observations and the probability of getting another element of 6/9 being estimated from the binomial distribution of 9 selections from a large population with a proportion of 0.667 (or 0.6 to 0.7 to provide a ‘sensitivity analysis’). The mathematical skills and innovations required to take things forward from this different sampling point of view are exactly the same as those used for other sampling concepts.

.

In medicine, we also use probabilistic reasoning by elimination based on an ‘expanded’ form of Bayes rule termed by me ‘the probabilistic elimination theorem’ (see: http://blog.oup.com/2013/09/medical-diagnosis-reasoning-probable-elimination/).

Huw:

Appears interesting and I did locate this http://www.clinsci.org/cs/057/cs0570477.htm , though even that behind a pay wall.

I does remind me of discussions with clinical researchers evaluating the QMR artificial intelligent diagnostic program in the 1980s.

In trying to communicate statistical logic to non-statisticians, including say Ian Hacking when I was in Toronto or Iain Chalmers in Oxford, its almost never actually successful.

Not sure how what you are talking to is related to what I am trying to animate here https://galtonbayesianmachine.shinyapps.io/GaltonBayesianMachine/

That is completely free and we can discuss it openly here (assuming OK with blog owner).

Keith O’Rourke

OK by me.

Thank you for your interest.

My problem is that I find it impossible to come to a logical conclusion if it involves unknowable things, e.g. hypothetical populations, angels on the heads of pins, etc.. In the same vein, I feel very uncomfortable about the definition of the ‘P’ value as “the probability of a ‘real’ observed result, OR something hypothetical that is more extreme, conditional upon another hypothetical observation (the null hypothesis)”. As Stephen Senn has pointed out elsewhere, this is meaningless.

I have never accepted this as a reasonable concept and I was not forced to accept it in order to pass any examinations as a young person so that it never became a fixed part of my system of ideas. Instead, I have regarded ‘P’ as being equal to the probability of non-replication of a study conditional ONLY on the ‘fact’ of the numerical result of the study. However, the non-replication (or replication) of the entire study depends also on other ‘conditional’ facts such as how well the work was done, other similar study results etc.

I think that to regard a study result as a sample drawn from a unknowable pool of other study results is impossibly obscure and makes those not trained as statisticians feel that they can never understand the subject. However, this is such an established part of statistics that I cannot seeing it being dropped, with the result that statisticians will be condemned to a purgatory of endless unresolvable arguments and being misunderstood for their troubles.

A closely related problem is applying ‘specificity’ and ‘false positive rates’ to diagnosis. One can define ‘sensitivity’ easily enough: the frequency of a ‘finding’ in those with a ‘diagnostic criterion’ (accepting of course that there may be ranges of severity of the finding and diagnosis). However, ‘those without the diagnostic criterion’ are very difficult to define and the ‘specificity’ will vary enormously depending on the populations in which they are measured and applied, being mostly a function of the prevalence of those with the diagnosis in the study population.

In medicine we reason by probabilistic elimination using ‘differential diagnoses’ that CAN be defined. I explain all this with a mathematical proof in Chapter 13 of the Oxford Handbook of Clinical Diagnosis (pp 615 to 642 – see ‘look inside’ on Amazon: http://www.amazon.co.uk/Handbook-Clinical-Diagnosis-Medical-Handbooks/dp/019967986X#reader_019967986X). I will send a personal (i.e. not to be copied again) PDF of Chapter 15 by email on request to me at hul2@aber.ac.uk.

Jumping in in the middle of this, I don’t see the null hypothesis as any kind of ‘hypothetical observation’ at all (even putting aside that it’s not an observation). In fact P-value reasoning strikes me as the kind of ordinary, day to day counterfactual reasoning people (some of us?) engage in. The Texas sharpshooter may only have shot these bullets and drawn bull’s eyes around them–never to be repeated– maintaining it is evidence he’s a good shot. The source of the bull’s eyes was not his marksmanship, and I can argue he would have had so impressively many bull’s eyes using this technique, even with no ability. The probability of this procedure informing me he’s a lousy shot by yielding lousy results, is low, whereas we want it to be high. In particular 1 – P-value.

On the other hand, viewing a study as drawn from a pool of studies is obscure. That is why I regard transplanting the use of specificity and sensitivity from screening to scientific inference as problematic. This was discussed in the discussion of the ‘pathetic p-value’ post prior to this one.

Huw:

Thanks for the email, I will contact you.

> I think that to regard a study result as a sample drawn from a unknowable pool of other study results is impossibly obscure and makes those not trained as statisticians feel that they can never understand the subject.

I certainly learned this from a group of about 20 Epidemiologists in a webinar I gave, the left hand machine in my animation which represents what actually happened in nature with all but the observed sample being unknowable was incomprehensible to all 20 even with repeated one to one email exchanges with the most interested. Those with statistical training (another webinar) seemed to have little difficulty here (some are a bit shocked about not needing any math.)

Its like for most people, known unkowns are dealt with by asking an expert who knows and unknown unknowns are sillier than angels dancing on the head of a pin.

Keith O’Rourke

Thank you both for raising these very useful points.

.

My concern about the ‘P’ value is not that it conditional upon a hypothetical fact (which is no different to a ‘sufficient’ criterion of a suspected diagnosis when describing a ‘likelihood’) but that it also partly predicts another hypothetical fact (i.e. also something more extreme than what has actually been observed). The other problem is that this definition of a ‘P’ value is not a proper likelihood and it cannot be used in a Bayesian calculation.

.

My understanding is that in Bayesian terms we have to imagine all the possible unknown outcomes and their unknown distribution if the study were repeated with all its faults using a large number of observations, giving us a continuous distribution of outcome results (one of which by the way could be chosen as a ‘null hypothesis’). We then have to estimate the small prior probabilities of getting each result in the distribution conditional on all the ‘prior’ facts about the study except the study result (which why the other facts are ‘prior’). For each possible outcome result, we then have to calculate (by using the binomial distribution if the result is a proportion) the likelihood of the actual observed result e.g. 6/9 (i.e. NOT the actual observed result of 6/9 OR some ‘hypothetical observation’ that is more extreme but that was not observed e.g. 7/9 or 8/9 or 9/9). We then use this to calculate a posterior probability of each possible outcome. We then choose a range of values called the credibility interval and sum the small probabilities inside this interval to get the probability of replicating the study with a result inside the interval (NOT replicating it for the range outside the interval).

.

The disadvantage of all this is that it is dependent from the beginning on the non-transparent ‘prior belief’ or ‘prejudice’ of a person who may have a conflict of interest in what the study result should imply (e.g. because of commercial gain from the sales of a new drug or the headache of having to fund it from a limited budget and other competing resources). It is this contentious aspect and lack of transparency about how the ‘prior subjective probability’ was estimated that I find obscure and undefined. It is this that can lead to un-resolvable disagreement about the prior probabilities of the distribution of study outcomes. I gather that if a Bayesian is ‘indifferent’ about the outcome prior probabilities and that the distribution is therefore uniform then the probability of non-replication for a ‘credibility interval’ beyond Ho is always equal to ‘P’. Is there a general proof for this interesting result?

.

The advantage of re-interpreting the ‘P’ value as the probability of non-replication given the numerical result of the study alone, using the same number of observations, is that it gives a simple, ‘objective’ preliminary indication of the probability of replication before other more arguable facts are taken into account. (‘Objective’ does not means it is correct, but that the same result will be found by different operators who make the same assumptions.) If this preliminary probability of non-replication is not low (e.g. <0.05), then the attempt to show a high probability of replication (or low probability of non-replication) of the study will have failed at the first hurdle. If it clears the first hurdle, then the probability of replication given all the facts can be estimated subsequently by using Bayesian methods. This preliminary probability can also be incorporated into a more transparent type of reasoning by probabilistic elimination. Here, the different causes of non-replication (e.g. data-dredging, multiple sub-group analyses, vague details of patient selection, etc.) are hopefully shown one by one to be improbable by a more transparent reasoning process. Again, if one of these ‘causes’ cannot be shown to be improbable, the attempt to show a high probability of replication fails at that hurdle. This approach also depends on ‘subjective’ likelihoods or probabilities but I think that it would be an improvement on simply asserting a prior probability of replication given the other facts about the study, without addressing each fact in turn in a transparent way.

Regarding your last point that “viewing a study as drawn from a pool of studies is obscure”, perhaps I should try to state it in a clearer way and to give a concrete example. A particular study result of 6/9 would be an element of the set of all study results of where p(X) = 6/9. If we chose 9 of these ‘p(X) = 6/9’ studies at random and observed the nature of next (10th) outcome (either X or ‘Not X’) for each set of 6/9, the possible proportions with ‘X’ would be 0/9 or 1/9, … or 6/9 … or 9/9. If we were to choose such 9 studies many times over, the proportion of the time would we get 0/9 or 1/9, … or 6/9 .. or 9/9 would be determined by the binomial distribution calculated using p = 0.667 (or Laplace’s p = (1+6)/(2+9) or ‘p’ with a lower bound of 0.6 and an upper bound of 0.7 by definition of a ‘mathematical probability’). In contrast to this, a Bayesian probability is an attempt to guess an unknown distribution with an infinitely (and thus unknown) large number of data points. Now I accept that all these are ’thought experiments’, being dependent on the nature of a mathematical model and various assumptions. What I propose as the set of sets with ‘p(X) = a/b’ is very easy for me to picture and not at all obscure. It also allows me to get a similar result to the ‘P’ value when I use it to calculate the probability of non-replication of a study given its numerical result alone, e.g. 6/9. Irrespective of all this I can also choose 'subjectively' to regard the ‘P’ value as being logically equivalent to the probability of non-replication of the ‘null hypothesis’ or something more extreme, conditional on the numerical result and number of observations in a study.

.

My objective is to try make it easier for statisticians, doctors, scientists to communicate better by using different analogies / models that are easier to share.

No P-values do not “partly predicts another hypothetical fact (i.e. also something more extreme than what has actually been observed). The other problem is that this definition of a ‘P’ value is not a proper likelihood and it cannot be used in a Bayesian calculation.”

We don’t want a “proper likelihood” or likelihood ratio alone because likelihood ratios fail to control error probabilities and fail to take account of the very gambits you list as leading to non replicability. Given how many points are confused, following the rule on this blog, I’ll just direct you to published work:

Click to access Error_Statistics_2011.pdf

Thank you for your comments and suggestions. I will read the above paper.

I have read the paper. It is written in the light of traditional statistical concepts. My wish is to interpret traditional statistical concepts in terms of the basic principles of reasoning in my own discipline of medicine. If I suspect an error in the facts reported to me about a patient then I will try to check them by re-examining the same phenomenon (e.g. a preserved x-ray image) or repeating the examination (e.g. doing another x-ray). If I confirm the reported fact using the original x-ray, then the report is ‘corroborated’. If I confirm it on a repeat x-ray, then it is ‘replicated’. Before confirming these facts I can predict the probability of corroboration or replication based on other facts prior to my corroborating or replicating observation. These will include the clarity of the description that I was given, the training of the person reporting it, the degree of the phenomenon (minimal findings often being difficult to report, gross or severe changes being easier), etc.

.

Many scientific studies involve multiple observations that are summarised by a proportion or an average as opposed to a single observation as in the above example. In order to interpret statistical calculations in terms of the concepts of replication used in medicine, one has to take a different path to the one familiar to statisticians. This can be done by recognising that the probability of an observation or something more extreme given a null hypothesis is equal to the probability of the same null hypothesis or something more extreme given the same observation. The rest follows as shown in my previous comments. David Colquhoun wishes to interpret statistical concepts from the viewpoint of a biological scientist. It is interesting to note that DC’s estimation of the ‘false discovery rate’, my ‘probability of non-replication’ and Stephen Senn’s ‘posterior probability’ are all different to the ‘P’ value. Our paths may converge after starting from different viewpoints.

Huw: “…recognising that the probability of an observation or something more extreme given a null hypothesis is equal to the probability of the same null hypothesis or something more extreme given the same observation.”. Do you mean this literally, or to say probability is equal if we reverse the null hypothesis value and the observation value? Specifically, do you mean to assign a probability to a null hypothesis itself, not an observed value?

John+

Assume that 30% more patients improved on treatment than placebo in a study, assume that the null hypothesis was that the difference was 0%, and the ‘P’ value was 0.01. This means that if the true difference was 0%, then the probability of getting by chance a difference of 30% or greater was 0.01. It also means that if the true difference was 30% then the probability of getting by chance a difference of 0% or less (i.e. when more responded to placebo than treatment) was also 0.01.

If we regard the study result as an element of the set of all study results with a 30% difference and the same number ‘N’ of subjects, and all these ‘30%’ studies were repeated with the same number ‘N’ of subjects (NOT an infinite number of subjects) the proportion in repeat studies showing by chance a 0% difference or less would be 1% and the probability of any one of the studies, including the one above, showing a difference of 0% of less would be 0.01. In other words the probability of replication with ‘N’ subjects when the difference was greater than 0% would be 1 – 0.01 = 0.99.

Now this probability of 0.99 is only based on the two items of conditional evidence: (1) the observed difference in proportions was 30% and (2) the number of subjects was ‘N’. However, if we took other information into account, the subsequent probability of replication given the above two items of information AND other conditional evidence (e.g. poor description of the subjects and methods used) may be lower. I would suggest that this estimate of the probability is carried out in a transparent way using the probabilistic elimination theorem (e.g. see: http://blog.oup.com/2013/09/medical-diagnosis-reasoning-probable-elimination/ ). Alternatively the probability of replication based on an infinite number of subjects could be made in a ‘non-transparent’ way using a Bayesian approach where the conditional evidence is not stated explicitly but incorporated into an over-arching ‘prior probability’.

Huw: “It also means that if the true difference was 30% then the probability of getting by chance a difference of 0% or less (i.e. when more responded to placebo than treatment) was also 0.01.” I think it is not quite that simple. IF we conduct a second test with null at ∆=30% AND same variance AND same distribution assumptions met, then this p=0.01 would be right. There is a similar issue when speaking of the group of all results with ∆=30%. Such a group could include results of experiments with wildly varying circumstances, such as highly divergent variances. Are you making implicit assumptions about these things?

Yes, I was assuming implicitly that the variance and distribution assumptions were the same because ‘P’ values are calculated (e.g. by using the Gaussian distribution model) by actually assuming that the distribution around the UNobserved null e.g. 0% is identical to the estimated distribution around the observed result e.g. 30%. I am also assuming that the observed study result is an element of the set of all study results with the same mean and distribution (estimated using the same assumptions). Sorry I should have been more explicit. However, for proportions, if we base the calculations on the binomial distribution, the probability of non-replication will be slightly different usually from the ‘P’ value, but very similar.

John: It is much worse than that because with proportions the variance is a function of the mean (proportion).

Here there are two parameters say Pc and Pt with Pt – Pc = .3. Now Pc is the nuisance parameter with Pt – Pc the interest parameter. It is well known to those who have studied statistical theory that any measure of repeated performance (e.g. p_value) will be to some degree affected by the unknown Pc.

Some folks treat this p_value as not a value but a function of Pc and produce nice plots to make this clear. But few have the skills and determination to study the theory of statistics (it requires a sound grasp of advanced mathematics.)

That was the point of my post above “Yes there is real value in understanding things analytically, but these are limited to unrealistically hopeful situations with no (or special) nuisance parameters” and the animation is a start to how to _see_ the theory without any university level math. (I have yet had a chance to carefully read Huw’s chapter, he seems to be rediscovering a variation of Don Fraser’s variation of fudical probability which fails for finite/discrete outcomes.)

(Here the challenge is those who get the math don’t need such devices and sometimes are even derogatory of them and those who don’t get the math don’t see the point.)

Sorry, I should get rid of the alias phaneron0

Keith O’Rourke

If the ‘P’ value is calculated by assuming that the nature of the distribution and its variance around the null hypothesis is identical to that around the observed result, then the probability of non-replication of the ‘null hypothesis’ and beyond, conditional on the observed result will be exactly the same as ‘P’. There will of course be varying differences otherwise.

The point is that scientists and doctors (the ‘customers’) are interested in the probability of replication given specified conditional evidence. This evidence not only includes the numerical values (number of measurements, proportions, means and distributions) but other facts too that have a bearing on replication, such as study design.

The mathematical skills needed to estimate the probability of replication conditional on the numerical values alone are the same as those used to calculate ‘P’ values etc. However, statisticians may understandably prefer to continue with their established ways of working by continuing to estimate ‘P’ values, etc. I think that this would be fine because in many cases the calculation will be identical and in others lead to a good approximation.

By the way, Chapter 13 of the Oxford Handbook of Clinical Diagnosis addresses the probability models used in the ‘transparent’ diagnostic reasoning process and only touches on ‘replication’, ’P’ values etc. Their role of probabilistic reasoning by elimination in replication is alluded to in: http://blog.oup.com/2013/09/medical-diagnosis-reasoning-probable-elimination/.

Huw:

I agree that if you form a reference set of studies based on have identical observed features, the underlying unknown parameters that generated the data will have a distribution – but I don’t see how you would know what that distribution is, other than assuming you do know.

Now in randomized clinical trials, it is conventional to test for differences in baseline covariates that could not have been affected by the treatment. Almost every RCT publication has these.

Now form a reference set of those. Not all studies will have done the randomization properly but those that did, the true difference parameter will be practically NULL. Say 50% did it properly – then 50% of your reference set of studies were generated with the NULL being true. Not symmetric around the NULL or the observed.

Keith O’Rourke

That is why frequentist priors are so rare….

But I am not concerned about the past generations of distributions that generated the identical observed distributions in my set of elements with identical study results. I am concerned only with the new set of which my study is a member. That set will have the same mean and distribution as each identical element (i.e. each study with an identical result).

The baseline covariates will form a subset of identical study results and if a correction is to be made, then our study will be a member of the subset with the same covariate corrections with its associated assumptions. If there was evidence that this correction may not have been done properly in our study, then that evidence will become part of the conditional evidence for adjusting the probability of the nest result falling within a replication interval (RI).

I envisage no ‘unconditional’ priors n the Bayesian fashion where the evidence is not specified (e.g. p(RI) or p(RI/?). The probability statements should be conditional on the evidence used (e.g. p(RI/E1∩E2∩E3∩…∩En)) such as the mean, variance, covariates, racial mix of patients etc.). (Note that each item of evidence creates a new subset.) I envisage that the initial probabilities would be ‘objective’ and based on well recognised statistical assumptions and calculations, but subsequent evidence will be more subjective but at least, the reasoning should be transparent.

The choice of replication interval is crucial of course. If the interval is ‘all results the same or more extreme than the one observed, then p(RI/E) will about 0.5. This is why about 50% of repeated studies will result in P being less significant and 50% more significant than during the original study, as we were reminded by Stephen Senn in his recent talk. On a related humorous note, a health minister recently expressed his dismay that 50% of care fell below average! However, most people would regard barely more than 0% difference as not replicating a study result that showed a substantial difference. If we expected a difference of at least one SEM more than ‘no difference’, when a one-sided P = 0.025 then p(Ṝḹ/E) would be about 0.16 and the p(RI/E) would be about 1 – 0.16 = 0.84.

I would like to add a post-script. The probability of 0.5 that ‘another study will give the same result or a result more extreme than the one observed’ is equivalent to the ‘power’ of the study and its sample size being 50%. It always the case of course, that that repeating a study with the same sample size will always have a power of 50% for getting the same P value or one more significant. So in terms of replication, power and sample size calculations, we have to envisage a hypothetical (1st) study result where the sample size is large enough to give a typical probability of replication of 0.8 that the planned (2nd real) study will provide a result where P ≤0.05 (or a probability of at least minimal replication of ≥0.95 for another subsequent (3rd) study).

I’ll confess that I was quite worried when I saw the result in your Figure, so I started to try to replicate it. I soon realised that your simulations address a quite different problem from mine. In yours, the true effect differs from one simulated test to the next, being sampled from the prior. That’s not sensible for answering my question, which is how do you interpret the result of a single test that produces (say) P=0.047.

It might help if you included an illustration of the form of your priors -it’s on slide 20 at http://www.slideshare.net/jemille6/senn-repligate?ref=https://errorstatistics.com/2015/05/24/from-our-philosophy-of-statistics-session-aps-2015-convention/

I can’t imagine what the justification would be for believing in those priors. In any case, the fact remains that if you follow the advice in all elementary textbooks, and do t tests, you’ll get a false positive rate of around 30% for a prior prevalence of 0.5, Anything less than 0.5 gives you an even higher false positive rate.

Of course it’s true that if the prior (prevalence) approaches 1, the false positive rate approaches zero. It does so, because you’ve assumed in advance that you’re right. Any prior bigger than 0.5 would be totally unacceptable to any sane journal editor (unless it was backed by very good evidence).

Just a little provocation:

The definition for the conditional probability is similar to the definition of division: let x be the number such that

P(A & B) = P(B) * x

As 0<= P(A & B)<= P(B)<= 1, this value x is always a well-defined number between 0 and 1. We can understand this number x as a value of a function of the events A and B, x = f(A,B), since this number varies with the events A and B.

It is possible to show that this function f(A,B) is a probability measure over the first argument, when B is fixed: that is f(.,B) is a probability measure: f(empty, B) = 0, f(Omega, B) = 1 and if C and D are disjoint sets, then f(C or D, B) = f(C,B) + f(D,B). Define P(A|B) = f(A,B).

That is, the interpretation for P(A|B) as "the probability of A given that the event B has occurred" seems to be fictional, since P(A|B) is just a number such that P(A & B) = P(B)*P(A|B).

All the best,

Alexandre Patriota

As 0<= P(A & B)<= P(B) 0, this value of x is…

obs: (provided that the probability of B is strictly greater than 0)

The site does not accept the symbols for “provided that the probability of B is strictly greater than 0”

In this link my message is more readable: https://statmath.wordpress.com/2015/07/03/conditional-probabilties/

Pingback: "Why should anyone believe that? Why does it make sense to model a series of astronomical events as though they were spins of a roulette wheel in Vegas?" - Statistical Modeling, Causal Inference, and Social Science Statistical Modeling, Causal I

Pingback: Why p values can’t tell you what you need to know and what to do about it – DC's Improbable Science