Stephen Senn

Head of Competence Center for Methodology and Statistics (CCMS)

Luxembourg Institute of Health

Double Jeopardy?: Judge Jeffreys Upholds the Law*[4]

“But this could be dealt with in a rough empirical way by taking twice the standard error as a criterion for possible genuineness and three times the standard error for definite acceptance”. Harold Jeffreys(1) (p386)

This is the second of two posts on P-values. In the first, The Pathetic P-Value, I considered the relation of P-values to Laplace’s Bayesian formulation of induction, pointing out that P-values, whilst they had a very different interpretation, were numerically very similar to a type of Bayesian posterior probability. In this one, I consider their relation, or lack of it, to Harold Jeffreys’s radically different approach to significance testing. (An excellent account of the development of Jeffreys’s thought is given by Howie(2), which I recommend highly.)

The story starts with Cambridge philosopher CD Broad (1887-1971), who in 1918 pointed to a difficulty with Laplace’s Law of Succession. Broad considers the problem of drawing counters from an urn containing n counters and supposes that all m drawn had been observed to be white. He now considers two very different questions, which have two very different probabilities and writes:

Note that in the case that only one counter remains we have n = m + 1 and the two probabilities are the same. However, if n > m+1 they are not the same and in particular if m is large but n is much larger, the first probability can approach 1 whilst the second remains small.
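Under the usual Laplacian assumption of a uniform prior on the number of white counters in the urn, the two probabilities compared here are the standard ones: (m+1)/(m+2) that the next counter drawn is white, and (m+1)/(n+1) that all n counters are white. The Python sketch below illustrates the point under that assumption; it is not Broad's own presentation, the function names are hypothetical, and the second formula is checked by summing directly over the prior.

```python
from fractions import Fraction
from math import comb


def prob_next_white(m: int) -> Fraction:
    """Probability that the next counter drawn is white, given m whites so far."""
    return Fraction(m + 1, m + 2)


def prob_all_white(m: int, n: int) -> Fraction:
    """Probability that all n counters are white, given the m drawn were white."""
    if not 0 <= m <= n:
        raise ValueError("need 0 <= m <= n")
    return Fraction(m + 1, n + 1)


def prob_all_white_by_summation(m: int, n: int) -> Fraction:
    """The same quantity computed directly from Bayes' theorem, as a check.

    Uniform prior on the number w of white counters (w = 0..n); the chance
    that m draws without replacement are all white is C(w, m) / C(n, m).
    math.comb returns 0 when w < m, which removes impossible urns.
    """
    weights = [Fraction(comb(w, m), comb(n, m)) for w in range(n + 1)]
    return weights[n] / sum(weights)


# Only one counter left (n = m + 1): the two probabilities coincide.
print(prob_next_white(99), prob_all_white(99, 100))  # 100/101 100/101

# m large but n much larger: "next is white" is near 1, "all are white" is small.
print(float(prob_next_white(1000)), float(prob_all_white(1000, 1_000_000)))
```

The second line prints roughly 0.998 and 0.001, which is the situation Jeffreys describes next for his feathered animals.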


The practical implication of this is that, just because Bayesian induction implies that a long sequence of successes (and no failures) supports belief that the next trial will be a success, it does not follow that one should believe that all future trials will be so. This distinction is often misunderstood. Here is The Economist getting it wrong in September 2000:

The canonical example is to imagine that a precocious newborn observes his first sunset, and wonders whether the sun will rise again or not. He assigns equal prior probabilities to both possible outcomes, and represents this by placing one white and one black marble into a bag. The following day, when the sun rises, the child places another white marble in the bag. The probability that a marble plucked randomly from the bag will be white (ie, the child’s degree of belief in future sunrises) has thus gone from a half to two-thirds. After sunrise the next day, the child adds another white marble, and the probability (and thus the degree of belief) goes from two-thirds to three-quarters. And so on. Gradually, the initial belief that the sun is just as likely as not to rise each morning is modified to become a near-certainty that the sun will always rise.
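The marble bag in this passage is Laplace's rule of succession: after m sunrises the bag holds m+1 white marbles and 1 black, so the belief in tomorrow's sunrise is (m+1)/(m+2). Under the same uniform prior on the chance of a sunrise, the probability that the next k mornings all bring sunrises is (m+1)/(m+k+1), which tends to zero as k grows. A small Python sketch of this standard result, with hypothetical function names, shows that the "near-certainty" attaches to tomorrow only:

```python
from fractions import Fraction


def marble_bag_belief(m: int) -> Fraction:
    """The bag after m sunrises: m + 1 white marbles and 1 black."""
    return Fraction(m + 1, m + 2)


def prob_next_k_sunrises(m: int, k: int) -> Fraction:
    """Probability that the next k mornings all bring a sunrise, given m so far.

    Uniform prior on the unknown chance p of a sunrise; after m sunrises and
    no failures the posterior is Beta(m + 1, 1), and E[p**k] = (m + 1)/(m + k + 1).
    """
    return Fraction(m + 1, m + k + 1)


print([str(marble_bag_belief(m)) for m in range(4)])  # ['1/2', '2/3', '3/4', '4/5']

m = 10_000
print(float(marble_bag_belief(m)))             # near-certainty about tomorrow
print(float(prob_next_k_sunrises(m, 10 * m)))  # only about 0.09 for the next 100,000 days
```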

See Dicing with Death(3) (pp76-78).

The practical relevance of this is that scientific laws cannot be established by Laplacian induction. Jeffreys (1891-1989) puts it thus:

Thus I may have seen 1 in 1000 of the ‘animals with feathers’ in England; on Laplace’s theory the probability of the proposition, ‘all animals with feathers have beaks’, would be about 1/1000. This does not correspond to my state of belief or anybody else’s. (p128)

Here Jeffreys is using Broad’s formula with the ratio of m to n of 1:1000.

To Harold Jeffreys the situation was unacceptable. Scientific laws had to be capable of being, if not proved, at least made more likely by a process of induction. The solution he found was to place a lump of probability on the simpler model that any particular scientific law would imply, compared to some vaguer and more general alternative. In hypothesis-testing terms, we can say that Jeffreys moved from testing

$H_{0A}: \theta \le 0$ vs $H_{1A}: \theta > 0$ and $H_{0B}: \theta \ge 0$ vs $H_{1B}: \theta < 0$

to testing

$H_0: \theta = 0$ vs $H_1: \theta \neq 0$

As he put it:

The essential feature is that we express ignorance of whether the new parameter is needed by taking half the prior probability for it as concentrated in the value indicated by the null hypothesis, and distributing the other half over the range possible.(1) (p249)

Now, the interesting thing is that in frequentist terms these two formulations make very little difference. The P-value calculated in the second case is the same as that in the first, although its interpretation is slightly different. In the second case it is, in a sense, exact, since the null hypothesis is ‘simple’. In the first case it is a maximum, since for a given statistic one calculates the probability of a result as extreme as or more extreme than that observed, for that value of the null hypothesis for which this probability is maximised.
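A minimal check of this point in Python, assuming a normal mean with known standard error (the observed mean 0.25 and standard error 0.1 are hypothetical): the upper-tail probability rises with θ, so maximising it over the composite null θ ≤ 0 lands on the boundary θ = 0 and reproduces the point-null P-value.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def upper_tail_prob(xbar_obs: float, theta: float, se: float) -> float:
    """P(Xbar >= xbar_obs) when Xbar ~ N(theta, se**2)."""
    return 1.0 - STD_NORMAL.cdf((xbar_obs - theta) / se)


xbar_obs, se = 0.25, 0.1  # hypothetical observed mean and standard error

# Point null H0: theta = 0; the P-value is exact.
p_point = upper_tail_prob(xbar_obs, 0.0, se)

# Composite null H0A: theta <= 0; take the maximum over the null region
# (here a grid on [-2, 0]). The tail probability increases with theta,
# so the maximum sits at the boundary theta = 0.
grid = [-k / 1000 for k in range(2001)]
p_composite = max(upper_tail_prob(xbar_obs, t, se) for t in grid)

print(p_point, p_composite)  # the same number
```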

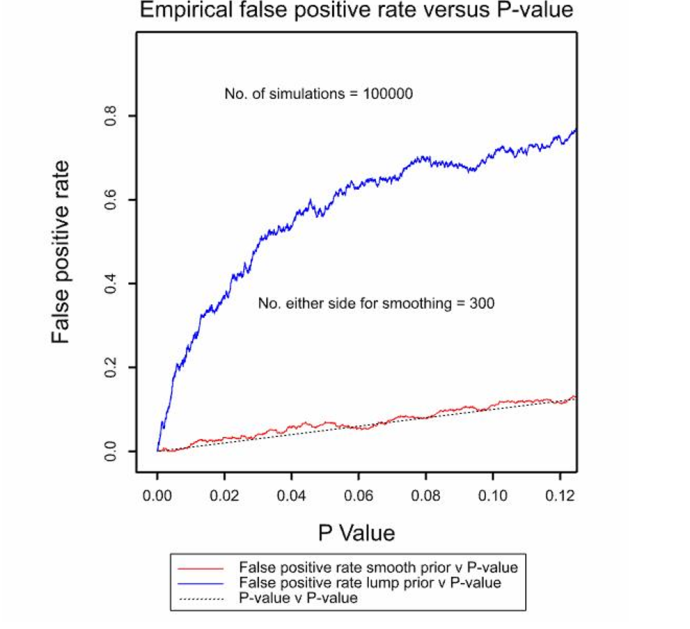

In the Bayesian case the answers are radically different, as is shown by the attached figure, which gives one-sided P-values and posterior probabilities (calculated from a simulation for fun rather than by necessity) for smooth and lump prior distributions. If we allow that θ may vary smoothly over some range, which is, in a sense, case 1 and is the Laplacian formulation, we get a very different result from allowing it to have a lump of probability at 0, which is the innovation of Jeffreys. The origin of the difference lies in the prior probability. It may seem that we are still in the world of uninformative prior probabilities, but this is far from so. In the Laplacian formulation every value of θ is equally likely. However, in the Jeffreys formulation the value under the null is infinitely more likely than any other value. This fact is partly hidden by the approach. First make H0 & H1 equally likely. Then make every value under H1 equally likely. The net result is that all values of θ are far from being equally likely.
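The contrast can be reproduced analytically rather than by simulation. The Python sketch below assumes a normal mean with known standard error, a flat smooth prior, and a lump prior putting half its mass at θ = 0 with the other half spread as N(0, τ²). The value of τ and the numbers are illustrative assumptions, not the settings behind the figure.

```python
from math import exp, pi, sqrt
from statistics import NormalDist


def normal_pdf(x: float, var: float) -> float:
    """Density of N(0, var) at x."""
    return exp(-x * x / (2 * var)) / sqrt(2 * pi * var)


def post_prob_smooth(xbar: float, se: float) -> float:
    """Flat prior on theta: posterior N(xbar, se**2); returns P(theta <= 0 | data)."""
    return NormalDist().cdf(-xbar / se)


def post_prob_lump(xbar: float, se: float, tau: float, prior_h0: float = 0.5) -> float:
    """Lump prior: mass prior_h0 at theta = 0, the rest spread as N(0, tau**2).

    Returns P(theta = 0 | data). Under H1, integrating theta out gives
    xbar ~ N(0, se**2 + tau**2).
    """
    m0 = normal_pdf(xbar, se ** 2)
    m1 = normal_pdf(xbar, se ** 2 + tau ** 2)
    return prior_h0 * m0 / (prior_h0 * m0 + (1 - prior_h0) * m1)


se, tau = 0.1, 1.0   # hypothetical standard error and spread under H1
xbar = 1.96 * se     # a result at the usual two-sided 5% boundary
p_one_sided = 1 - NormalDist().cdf(xbar / se)

print(f"one-sided P-value        {p_one_sided:.3f}")
print(f"smooth prior P(theta<=0) {post_prob_smooth(xbar, se):.3f}")
print(f"lump prior P(theta=0)    {post_prob_lump(xbar, se, tau):.3f}")
```

With the smooth prior the posterior probability of the null region equals the one-sided P-value. With the lump prior the posterior probability of the null is well above one half, and it grows further as τ is widened.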

This simple case is a paradigm for a genuine issue in Bayesian inference that arises over and over again. It is crucially important how you pick up the problem when specifying prior distributions. (Note that this is not in itself a criticism of the Bayesian approach. It is a warning that care is necessary.)


Has Jeffreys’s innovation been influential? Yes and no. A lot of Bayesian work seems to get by without it. For example, an important paper, Bayesian Approaches to Randomized Trials, by Spiegelhalter, Freedman and Parmar(4), which has been cited more than 450 times according to Google Scholar (as of 7 May 2015), considers four types of smooth priors (reference, clinical, skeptical and enthusiastic), none of which involves a lump of probability.

However, there is one particular area where an analogous approach is very common, and that is model selection. The issue here is that if one just uses likelihood as a guide between nested models, one will always prefer the more complex one to the simpler one. A practical likelihood-based solution is to use a penalised form whereby the likelihood is handicapped by a function of the number of parameters. The most famous of these is Akaike’s information criterion, the AIC. It is sometimes maintained that this deals with a very different sort of problem from that addressed by hypothesis/significance testing, but its originator, Akaike (1927-2009)(5), clearly did not think so, writing

So, it is clear that Akaike regarded this as a unifying approach to estimation and hypothesis testing: that which was primarily an estimation tool, likelihood, was now a model selection tool also.


However, as Murtaugh(6) points out, there is a strong similarity between using AIC and using P-values to judge the adequacy of a model. The AIC involves the log-likelihood, and so does the analysis of deviance, which relies on the fact that, asymptotically and under the simpler model, minus twice the difference in log likelihoods between two nested models (simpler minus more complex) has a chi-square distribution with mean equal to the difference in the number of parameters modelled. The net result is that if you use AIC to choose between a simpler model and a more complex model within which it is nested, and the more complex model has one extra parameter, choosing or rejecting the more complex model is equivalent to using a significance threshold of 16%.
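The 16% figure can be checked directly. A Python sketch, assuming the usual asymptotics: with AIC = 2k - 2 log L, one extra parameter is accepted when the likelihood-ratio statistic exceeds 2, and for a chi-square variable with one degree of freedom P(X > c) = P(|Z| > sqrt(c)) = erfc(sqrt(c/2)), so the implied threshold is erfc(1). The function name is hypothetical.

```python
from math import erfc, sqrt


def chi2_1_upper_tail(c: float) -> float:
    """P(X > c) for X ~ chi-square with 1 df, i.e. P(|Z| > sqrt(c))."""
    return erfc(sqrt(c / 2))


# AIC = 2k - 2 log L. With one extra parameter the larger model wins when
# 2 * (logL_big - logL_small) > 2, i.e. when the LR statistic exceeds 2.
aic_alpha = chi2_1_upper_tail(2.0)
print(f"{aic_alpha:.4f}")  # 0.1573

# For comparison, the conventional 5% two-sided threshold is 1.96**2.
print(f"{chi2_1_upper_tail(1.959964 ** 2):.4f}")  # 0.0500
```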

For those who are used to the common charge, levelled for example by Berger and Sellke(7) and, more recently, by David Colquhoun(8) in his 2014 paper, that the P-value approach gives significance too easily, this is a baffling result: judged by the AIC, significance tests are too conservative rather than too liberal. Of course, Bayesians will prefer the BIC to the AIC, and this means that there is a further influence of sample size on inference that is not captured by any function that depends on likelihood and number of parameters only. Nevertheless, it is hard to argue that, whatever advantages the AIC may have in terms of flexibility, for the purpose of comparing nested models it somehow represents a more rigorous approach than significance testing.
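The sample-size dependence of the BIC can be seen the same way. Assuming BIC = k log(n) - 2 log L and the same chi-square approximation, one extra parameter is accepted when the likelihood-ratio statistic exceeds log(n). The implied significance level therefore falls as n grows, and it matches the AIC's at n = e^2 (about 7.4). A Python sketch; the function name is hypothetical:

```python
from math import erfc, exp, log, sqrt


def bic_equivalent_alpha(n: float) -> float:
    """Significance level implied by BIC for one extra parameter (n > 1).

    BIC = k log(n) - 2 log L, so the larger model wins when the LR statistic
    exceeds log(n); under the simpler model the statistic is ~ chi-square(1).
    """
    return erfc(sqrt(log(n) / 2))


for n in (10, 100, 1_000, 10_000):
    print(n, f"{bic_equivalent_alpha(n):.4f}")
```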

However, the result is easily understood if one appreciates the following. Within the Bayesian framework, in abandoning smooth priors for lump priors, it is also necessary to change the probability standard. (In fact I speculate that the 1 in 20 standard seemed reasonable partly because of the smooth prior.) In formulating the hypothesis-testing problem the way that he does, Jeffreys has already used up any preference for parsimony in terms of prior probability. Jeffreys made it quite clear that this was his view, stating

I maintain that the only ground that we can possibly have for not rejecting the simple law is that we believe that it is quite likely to be true (p119)

He then proceeds to express this in terms of a prior probability. Thus there can be no double jeopardy. A parsimony principle is used on the prior distribution. You can’t use it again on the posterior distribution. Once that is calculated, you should simply prefer the more probable model. The error that is made is not only to assume that P-values should be what they are not but that when one tries to interpret them in the way that one should not, the previous calibration survives.

It is as if in giving recommendations in dosing children one abandoned a formula based on age and adopted one based on weight but insisted on using the same number of kg one had used for years.

Error probabilities are not posterior probabilities. Certainly, there is much more to statistical analysis than P-values but they should be left alone rather than being deformed in some way to become second class Bayesian posterior probabilities.

ACKNOWLEDGEMENT

My research on inference for small populations is carried out in the framework of the IDEAL project http://www.ideal.rwth-aachen.de/ and supported by the European Union’s Seventh Framework Programme for research, technological development and demonstration under Grant Agreement no 602552.

REFERENCES

- Jeffreys H. Theory of Probability. Third ed. Oxford: Clarendon Press; 1961.

- Howie D. Interpreting Probability: Controversies and Developments in the Early Twentieth Century. Skyrms B, editor. Cambridge: Cambridge University Press; 2002. 262 p.

- Senn SJ. Dicing with Death. Cambridge: Cambridge University Press; 2003.

- Spiegelhalter DJ, Freedman LS, Parmar MKB. Bayesian approaches to randomized trials. Journal of the Royal Statistical Society, Series A (Statistics in Society). 1994;157:357-87.

- Akaike H. Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csáki F, editors. Second International Symposium on Information Theory. Budapest: Akadémiai Kiadó; 1973. p. 267-81.

- Murtaugh PA. In defense of P values. Ecology. 2014;95(3):611-7.

- Berger JO, Sellke T. Testing a point null hypothesis: the irreconcilability of P values and evidence (with discussion). Journal of the American Statistical Association. 1987;82:112-39.

- Colquhoun D. An investigation of the false discovery rate and the misinterpretation of p-values. Royal Society Open Science. 2014;1(3):140216.

Also Relevant

- Casella G, Berger RL. Reconciling Bayesian and frequentist evidence in the one-sided testing problem (with discussion). Journal of the American Statistical Association. 1987;82:106-11, 123-39.

*This post follows Senn’s previous guest post here. It was first blogged last May here. (Check the comments to that post, please.) It’s 4th [4] in my “Let PBP” series. Please comment, if you wish.

Among noteworthy points in this excellent post by Senn is the recognition that “in the Jeffreys formulation the value under the null is infinitely more likely than any other value. This fact is partly hidden by the approach. First make H0 & H1 equally likely. Then make every value under [H1] equally likely. The net result is that all values of θ are far from being equally likely”. Yet this is the move we see, not only in critics of significance tests (of the sort Senn is discussing here), but in some popular current “reforms” of tests.[i] Also crucial is Senn’s remark about age and weight:

The error that is made is not only to assume that P-values should be what they are not but that when one tries to interpret them in the way that one should not, the previous calibration survives.

It is as if in giving recommendations in dosing children one abandoned a formula based on age and adopted one based on weight but insisted on using the same number of kg one had used for years.

[i] I argue that they actually license a stronger inference than significance testers would countenance, namely, an inference to an alternative against which the test has high power.

Related Posts

P-values overstate the evidence?

P-values versus posteriors (comedy hour)

Spanos: Recurring controversies about P-values and confidence intervals (paper in relation to Murtaugh)

Stephen Senn: I have twice posted the Michelson data on the speed of light,

880,880,880,860,720,720,620,860,970,950,880,910,850,870,840,840,850,840,840,840

and asked for a P-value for the accepted modern value, 734.5, that is, for H_0: c = 734.5. No offers. Instead of 734.5, let us take a less extreme value for the purpose of illustration, say 800. If one calculates the P-value by the standard method based on the t-distribution, 2(1-pt(sqrt(n)*abs(mean(x)-mu_0)/sd(x),n-1)), the P-value is about 0.02. This may well be rejected on the grounds that the data look nothing like a Gaussian sample of size 20.

Instead of basing the judgement of non-normality on arm-waving combined with a verbal expression of rejection, we give some thought to how to make this judgement precise. This has two advantages. Firstly, we lay our cards on the table, so that everyone can criticize our method. Secondly, it produces a measure of the non-normality.

What does a sample of size n of i.i.d. N(mu,sigma^2) look like? (1) sqrt(n)abs(mean(x)-mu)/sigma <= qnorm((1+alpha)/2) with probability alpha; (2) qchisq((1-alpha)/2,n) <= sum((x_i-mu)^2)/sigma^2 <= qchisq((1+alpha)/2,n) with probability alpha; (3) d_{ku}(P_n,N(mu,sigma^2)) < q_{ku}(alpha,n) with probability alpha, where d_{ku} is the Kuiper distance between the empirical distribution P_n and N(mu,sigma^2); and (4) max(abs(x_i-mu))/sigma < qnorm((1+alpha^(1/n))/2), also with probability alpha. We thus check the mean of the sample against mu, the variance against sigma^2, the distribution function of the sample against that of N(mu,sigma^2), and the absence of outliers. If we replace alpha by (3+alpha)/4, each check fails with probability (1-alpha)/4, so by the Bonferroni inequality all four inequalities hold simultaneously with probability at least alpha for a sample which is indeed N(mu,sigma^2). Here is an R programme which does exactly this.

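```r
fpval<-function(x,alpha,ngrid=20){
  # Collects (and plots) the (mu, sigma) grid points for which x 'looks like'
  # a N(mu, sigma^2) sample. qkuip() (quantile of the Kuiper distance) is not
  # defined in this comment and must be supplied separately.
  n<-length(x)
  x<-sort(x)
  beta<-(3+alpha)/4                # each of the four checks at level beta
  q1<-qnorm((1+beta)/2)            # (1) mean
  q21<-qchisq((1-beta)/2,n)        # (2) sum of squares, lower bound
  q22<-qchisq((1+beta)/2,n)        # (2) sum of squares, upper bound
  q3<-qnorm((1+beta^(1/n))/2)      # (4) largest absolute residual
  q4<-qkuip(beta)                  # (3) Kuiper distance
  sigu<-sqrt((n-1)*sd(x)^2/(q21-q1^2/n))
  sigl<-sqrt((n-1)*sd(x)^2/q22)
  muu<-mean(x)+q1*sigu/sqrt(n)
  mul<-mean(x)-q1*sigu/sqrt(n)
  theta<-double(2*(ngrid+1)^2)
  dim(theta)<-c((ngrid+1)^2,2)
  ic<-0
  for(j in 0:ngrid){
    sig<-sigl*exp(j*log(sigu/sigl)/ngrid)   # log-spaced grid for sigma
    for(i in (-ngrid/2):(ngrid/2)){
      mu<-mean(x)+2*i*q1*sig/(ngrid*sqrt(n))
      # Reset the failure flags for every (mu, sigma); the original reset them
      # only once per sigma, so one failure rejected the rest of that row.
      ic1<-0; ic2<-0; ic3<-0; ic4<-0
      # The checks below were garbled in extraction; this is a reconstruction.
      y<-(x-mu)/sig                      # standardised sample, still sorted
      if(abs(sqrt(n)*mean(y))>q1+0.0001){ic1<-1}
      sy2<-sum(y^2)
      if((sy2>q22)|(sy2<q21)){ic2<-1}
      if(max(abs(y))>q3){ic3<-1}
      Fy<-pnorm(y)                       # Kuiper distance to N(0,1)
      dk<-max((1:n)/n-Fy)+max(Fy-(0:(n-1))/n)
      if(dk>q4){ic4<-1}
      if(ic1+ic2+ic3+ic4==0){
        ic<-ic+1
        theta[ic,1]<-mu
        theta[ic,2]<-sig
      }
    }
  }
  if(ic>0){
    theta<-theta[1:ic,]
    plot(theta)
  } else {
    theta<-NaN
  }
  list(theta)
}
```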

Thus, given alpha, the programme calculates all those (mu,sigma) for which the data ‘look like’ a N(mu,sigma^2) sample in the sense made precise above. This is called the alpha-approximation region. If the data really are N(mu,sigma^2), then with probability at least alpha the true (mu,sigma) will be included in the list of acceptable parameter values. At first glance it looks as though the programme does no more than calculate a somewhat non-standard confidence region. One can define a P-value as the largest value of beta such that the (1-beta) confidence interval for mu contains mu_0. If we transfer this to the idea of approximation, the P-value is the largest value of beta such that the (1-beta)-approximation region contains (mu_0,sigma) for some sigma. If we do this for the Michelson data and mu_0=800, the P-value is 1.9e-05, in contrast to the 0.02. Thus the P-value is a measure of how well the data can be approximated by the N(mu_0,sigma^2) distribution for some sigma.
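The t-based figure of about 0.02 quoted in the comment can be checked without R. The Python sketch below mirrors the one-liner 2(1-pt(...)) for the data as posted and mu_0 = 800. To stay within the standard library it obtains the t tail area by Simpson integration of the t density; that is a choice made here, where a library t-distribution CDF would normally be used. The function names are hypothetical. It does not reproduce the 1.9e-05 from the approximation region, which needs the Kuiper quantiles.

```python
from math import exp, lgamma, log, pi, sqrt
from statistics import mean, stdev

x = [880, 880, 880, 860, 720, 720, 620, 860, 970, 950,
     880, 910, 850, 870, 840, 840, 850, 840, 840, 840]
mu0 = 800


def t_pdf(t: float, df: int) -> float:
    """Density of Student's t with df degrees of freedom."""
    logc = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(logc - (df + 1) / 2 * log(1 + t * t / df))


def t_two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """1 - 2 * integral_0^|t| of the density, via Simpson's rule (steps even)."""
    a, b = 0.0, abs(t)
    h = (b - a) / steps
    s = t_pdf(a, df) + t_pdf(b, df)
    s += sum((4 if k % 2 else 2) * t_pdf(a + k * h, df) for k in range(1, steps))
    return 1 - 2 * s * h / 3


n = len(x)
t_stat = sqrt(n) * abs(mean(x) - mu0) / stdev(x)
p = t_two_sided_p(t_stat, n - 1)
print(round(t_stat, 3), round(p, 4))  # t is about 2.54, P about 0.02
```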

Jeffreys has criticized P-values as follows:

… gives the probability of departures, measured in a particular way, equal to or greater than the observed set, and the contribution from the actual value is nearly always negligible. What the use of $P$ implies, therefore, is that a hypothesis that may be true may be rejected because it has not predicted observable results that have not occurred. This seems to be a remarkable procedure. On the face of it, the evidence might more reasonably be taken as evidence for the hypothesis, not against it.

The alpha-approximation region can be seen as the set of (mu,sigma) values for which the following prediction is correct: I predict that inequalities (1)-(4) will all hold, and my prediction will be correct with probability at least alpha. The larger alpha, the less precise my prediction. Thus a P-value measures how weak my predictions must be in order to be successful for mu_0. So if a hypothesis is rejected, it is rejected because an event predicted not to occur did occur. This is the opposite of Jeffreys's point of view.
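The prediction reading can be checked by simulation for two of the four inequalities. The Python sketch below draws genuinely N(0,1) samples of size 20 and counts how often predictions (1) and (4) succeed at alpha = 0.95; both rates should sit close to alpha. Checks (2) and (3) are left out only because they need chi-square and Kuiper quantiles, which Python's standard library does not provide. The function names, seed and number of replications are arbitrary assumptions.

```python
import random
from statistics import NormalDist, fmean


def holds_1(x, mu, sigma, alpha):
    """(1): sqrt(n)|mean(x) - mu| / sigma <= qnorm((1 + alpha) / 2)."""
    q1 = NormalDist().inv_cdf((1 + alpha) / 2)
    return len(x) ** 0.5 * abs(fmean(x) - mu) / sigma <= q1


def holds_4(x, mu, sigma, alpha):
    """(4): max|x_i - mu| / sigma < qnorm((1 + alpha**(1/n)) / 2)."""
    q3 = NormalDist().inv_cdf((1 + alpha ** (1 / len(x))) / 2)
    return max(abs(xi - mu) for xi in x) / sigma < q3


def success_rate(check, alpha=0.95, n=20, reps=20_000, seed=1):
    """Fraction of genuinely N(0, 1) samples for which the prediction holds."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        hits += check(x, 0.0, 1.0, alpha)
    return hits / reps


print(success_rate(holds_1), success_rate(holds_4))  # both close to 0.95
```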

A fundamental problem with the idea of a prior formalising belief is that parsimony and regularisation are desirable for reasons other than belief in the truth of simplicity. If that’s accepted and Bayesians still want to use priors for achieving parsimony and regularisation, it has to be acknowledged that priors encode something other than belief.

(I suspect something like this holds for Jaynesian “information”; he has written on it and I have read it but I can’t access it right now to refresh my memory.)

Christian: How then would you describe the goal of regularization?

Regularisation brings stability and (usually) better predictive power regardless of whether the truth is parsimonious or not.

Why, if it’s not based on warrant or evidence?

Sometimes (Bayesian) regularisation means “biasing” methods away from complexity by prior design. Often, if the true model is indeed complex but in such a way that telling it apart from something much simpler requires truckloads of observations, regularised predictions are better and regularised estimators are more stable unless the number of observations is huge. So (Bayesian or non-Bayesian) regularisation brings benefits in these situations despite the fact that what’s encoded in the prior doesn’t match the truth.

Regarding evidence or warrant, I’d say this is about identifiability. This is about situations in which there’s not enough evidence to be found, due to lack of data (not necessarily because the amount of data is really small but rather because far too much would be required), in order to nail down the complexity in case it exists. So one can do better blocking complexity, whether it is really there or not.

Regularisation has usually to do with the inability to tell apart what really is the case from something “statistically pathological”.

Not sure what you mean by “statistically pathological” here: too complex to be represented with a simple stat model?

This could mean various things. In Gaussian mixture models, for example, the likelihood goes to infinity if a mixture component mean is fixed at any single observation and the component variance is allowed to go to zero; more generally, there are “spurious” local maxima of the likelihood with mixture components that have few observations and low variance. In multiple regression with many variables, collinearity or near collinearity does not allow all parameters to be estimated with reasonable precision, so dimension reduction is needed not because we “believe” that the dimension is indeed low but in order to have a model with identifiable parameters.
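The mixture case is easy to see numerically. In the Python sketch below (hypothetical data and parameter values), one component's mean is pinned on the first observation and its standard deviation is shrunk. That observation's density term blows up, while the second component keeps every other term bounded below, so the log-likelihood grows without limit and an unconstrained maximum-likelihood estimate does not exist.

```python
from math import exp, log, pi, sqrt


def normal_pdf(x: float, mu: float, sd: float) -> float:
    """Density of N(mu, sd**2) at x."""
    return exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * sqrt(2 * pi))


def mixture_loglik(data, w, mu1, sd1, mu2, sd2):
    """Log-likelihood of a two-component Gaussian mixture."""
    return sum(
        log(w * normal_pdf(x, mu1, sd1) + (1 - w) * normal_pdf(x, mu2, sd2))
        for x in data
    )


data = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.5, 2.2]  # hypothetical observations

# Pin component 1's mean on data[0] and let its sd go towards zero.
for sd1 in (1.0, 0.1, 1e-3, 1e-6):
    print(sd1, round(mixture_loglik(data, 0.5, data[0], sd1, 0.5, 1.0), 2))
```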

Writing down the model is not a problem but estimating it can’t work because either huge amounts of observations are needed to estimate the parameters or they cannot be estimated at all because of identifiability issues.

It is noteworthy that the false positive rate is close to the P-value in Senn’s chart, for all cases without biased spike priors.
