Stephen Senn

Competence Centre for Methodology and Statistics

CRP Santé

Strassen, Luxembourg

George Barnard has had an important influence on the way I think about statistics. It was hearing him lecture in Aberdeen (I think) in the early 1980s (I think) on certain problems associated with Neyman confidence intervals that woke me to the problem of conditioning. Later as a result of a lecture he gave to the International Society of Clinical Biostatistics meeting in Innsbruck in 1988 we began a correspondence that carried on at irregular intervals until 2000. I continue to have reasons to be grateful for the patience an important and senior theoretical statistician showed to a junior and obscure applied one.

One of the things Barnard was adamant about was that you had to look at statistical problems with various spectacles. This is what I propose to do here, taking as an example meta-analysis. Suppose that a meta-analyst is faced with a number of trials in a given field and that these trials have been carried out sequentially. In fact, to make the problem both simpler and more acute, suppose that no stopping rule adjustments have been made. Suppose, unrealistically, that each trial has identical planned maximum size but that a single interim analysis is carried out after a fraction f of information has been collected. For simplicity we suppose this fraction f to be the same for every trial. The question is ‘should the meta-analyst ignore the stopping rule employed?’ The answer is ‘yes’ or ‘no’ depending on how (s)he combines the information and, interestingly, this is not a question of whether the meta-analyst is Bayesian or not.

To make things concrete, suppose that the true treatment effect is 1 and that the standard error of the estimated treatment effect, assumed Normally distributed, is 1 at conclusion. Suppose also that the naïve type one error rate is 5% one-sided, that the fraction at which one will look is 50%, and that the trials are large (to avoid having to worry about nuisance parameters). We assume stopping for large (positive) ‘significant’ values. If that is the case then just over 17% of trials will stop early and just under 83% will run to conclusion. The mean value of stopped trials will be about 3.09 and the mean value of trials that continue will be about 0.78. Note that, as is obvious, the mean value of stopped trials will be larger than the true value (3.09 is greater than 1). What is slightly less obvious is that the mean value of continued, or ‘full’, trials will be less than the true value (0.78 is less than 1).
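The figures quoted above can be reproduced with the standard formulae for the mean of a truncated Normal distribution. What follows is only a sketch under the stated assumptions (final standard error 1, so the interim estimate at information fraction f has standard error 1/√f); the function name `interim_summary` and its arguments are illustrative and not part of the original calculation.

```python
from math import sqrt
from statistics import NormalDist

def interim_summary(theta=1.0, f=0.5, alpha=0.05):
    """Stopping probability and conditional means for one interim look.

    Assumes the final estimate has standard error 1, so the interim
    estimate at information fraction f has standard error 1/sqrt(f).
    """
    z = NormalDist()
    se1 = 1.0 / sqrt(f)                  # SE of the interim estimate
    cut = z.inv_cdf(1.0 - alpha) * se1   # stop if interim estimate > cut
    a = (cut - theta) / se1              # standardised cut-off
    p_stop = 1.0 - z.cdf(a)
    # Means of the interim estimate, truncated above or below the cut-off
    mean_stopped = theta + se1 * z.pdf(a) / p_stop
    mean_first_part = theta - se1 * z.pdf(a) / (1.0 - p_stop)
    # A continued trial combines its first part (information f) with an
    # independent second part (information 1 - f) whose mean is theta.
    mean_full = f * mean_first_part + (1.0 - f) * theta
    return p_stop, mean_stopped, mean_first_part, mean_full

p_stop, m_stop, m_first, m_full = interim_summary()
print(f"P(stop early)            = {p_stop:.3f}")
print(f"mean of stopped trials   = {m_stop:.2f}")
print(f"mean of first halves     = {m_first:.2f}")
print(f"mean of continued trials = {m_full:.2f}")
```

Under these assumptions the stopping probability comes out a little over 0.17, and the two conditional means agree with the 3.09 and 0.78 given in the text.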

Now suppose that we have 100 such trials, 17 of which stopped early and 83 of which carried on to conclusion. If we calculate the average result from such trials, we will have a statistic with an expected value of (17 x 3.09 + 83 x 0.78)/100 = 1.17. This is bigger than 1 so clearly we have a biased statistic and, given that we know that trials that are stopped early will exaggerate the treatment effect, this is perhaps not surprising.

However, this is not the way that meta-analysts usually proceed. Each concluded trial has twice the information of a stopped trial and larger trials are usually weighted more. If we reflect the weighting we now need to give concluded trials twice the weight of stopped ones and calculate (17 x 3.09 + 2 x 83 x 0.78)/(17 + 2 x 83) = 0.99, which is 1 apart from rounding in the figures used, so now we have an unbiased statistic.
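The two ways of pooling can be compared with a few lines of arithmetic. This is a sketch that uses only the rounded figures quoted in the text (17 stopped trials averaging 3.09, 83 full trials averaging 0.78); the helper `pooled_mean` is a hypothetical name introduced for illustration.

```python
def pooled_mean(groups):
    """Weighted mean over groups of (number_of_trials, mean, weight_per_trial)."""
    total_weight = sum(n * w for n, _, w in groups)
    return sum(n * w * m for n, m, w in groups) / total_weight

# Equal weights: every trial counts the same, whatever its size.
equal = pooled_mean([(17, 3.09, 1.0), (83, 0.78, 1.0)])

# Information weights: a stopped trial carries half the information
# of a trial that ran to conclusion.
by_info = pooled_mean([(17, 3.09, 0.5), (83, 0.78, 1.0)])

print(f"equal weights:       {equal:.3f}")
print(f"information weights: {by_info:.3f}")
```

With these rounded inputs the information-weighted figure falls just short of 1; the small shortfall reflects rounding in 17, 83, 3.09 and 0.78 rather than any bias.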

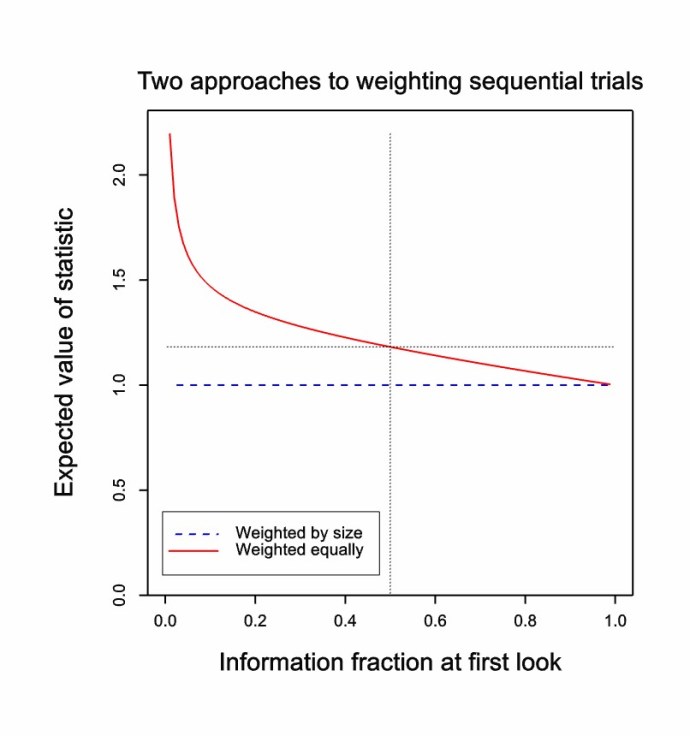

In fact the figure below shows the expected value (for the above example) plotted against an information fraction varying from 0.01 to 0.99. We can see that for the case where stopped and ‘full’ trials are weighted equally there is considerable bias, especially for small values of the information fraction. However, for the case where we weight each trial by its size, the result is always 1, which is the true expected value. (The particular value of ½ for the information fraction considered in the example is picked out by dotted lines in the figure.)
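The behaviour across information fractions can be checked numerically. The sketch below makes the same assumptions as the example (true effect 1, final standard error 1, naïve one-sided 5% test at a single look) and computes the long-run pooled value as the expected weighted sum divided by the expected total weight, which is the calculation used in the text for 100 trials. The function name `expected_pooled` and the grid of fractions are illustrative choices.

```python
from math import sqrt
from statistics import NormalDist

def expected_pooled(f, theta=1.0, alpha=0.05):
    """Long-run pooled value under equal and information weighting."""
    z = NormalDist()
    se1 = 1.0 / sqrt(f)                           # SE at the interim look
    a = (z.inv_cdf(1.0 - alpha) * se1 - theta) / se1
    p_stop = 1.0 - z.cdf(a)
    shift = se1 * z.pdf(a)                        # p_stop * (mean_stopped - theta)
    mean_stopped = theta + shift / p_stop
    mean_full = theta - f * shift / (1.0 - p_stop)
    # Equal weighting: every trial counts once.
    equal = p_stop * mean_stopped + (1.0 - p_stop) * mean_full
    # Information weighting: stopped trials carry f, full trials carry 1.
    total_info = p_stop * f + (1.0 - p_stop)
    by_info = (p_stop * f * mean_stopped + (1.0 - p_stop) * mean_full) / total_info
    return equal, by_info

grid = [0.01, 0.1, 0.25, 0.5, 0.75, 0.9, 0.99]
results = {f: expected_pooled(f) for f in grid}
for f, (equal, by_info) in results.items():
    print(f"f = {f:4.2f}  equal = {equal:6.3f}  by information = {by_info:6.3f}")
```

Working with unrounded proportions, the equally weighted value at f = ½ is about 1.18 rather than the 1.17 obtained from 17 and 83 trials, while the information-weighted value stays at 1 for every fraction in the grid.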

Why does this happen? Return to the case of the information fraction of ½ and consider the ‘full’ trials that run to completion. We can consider them in two halves. The first half of every such trial has an expected value of 0.56. This is less than 1 because those higher than average values that would lead to stopping are removed from consideration. If we weight the 83 first halves of the continued trials with the 17 stopped trials we get (83 x 0.56 + 17 x 3.09)/100 = 0.99, which is 1 apart from rounding, and a little thought shows that this is quite unsurprising: together these are simply the first halves of all 100 trials, with no selection applied. However, these first portions of the continued trials are only half of such trials, so the full trials have to be given overall twice the weight of the stopped trials in order that the portion of the full trials that compensates for early stopping is given appropriate weight.
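The argument about halves can also be illustrated by simulation. This is a rough Monte Carlo sketch under the assumptions of the example, not a reconstruction of how the original figures were obtained; the seed, the number of simulated trials and all variable names are arbitrary choices made for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

random.seed(1)                                    # arbitrary seed
theta, f, alpha = 1.0, 0.5, 0.05
se_first = 1.0 / sqrt(f)                          # SE of the first part
se_second = 1.0 / sqrt(1.0 - f)                   # SE of the second part
cut = NormalDist().inv_cdf(1.0 - alpha) * se_first

n_trials = 200_000
first_all, first_continued = [], []
weighted_sum = total_weight = 0.0
for _ in range(n_trials):
    x1 = random.gauss(theta, se_first)            # interim estimate
    first_all.append(x1)
    if x1 > cut:                                  # naive 'significance': stop
        weighted_sum += f * x1                    # stopped trial, weight f
        total_weight += f
    else:
        x2 = random.gauss(theta, se_second)       # independent second part
        first_continued.append(x1)
        weighted_sum += f * x1 + (1.0 - f) * x2   # full trial, weight 1
        total_weight += 1.0

mean_first_continued = fmean(first_continued)
mean_first_all = fmean(first_all)
pooled_by_info = weighted_sum / total_weight
print(f"first halves of continued trials: {mean_first_continued:.3f}")
print(f"first halves of all trials:       {mean_first_all:.3f}")
print(f"information-weighted pooling:     {pooled_by_info:.3f}")
```

The first halves of the continued trials should average roughly 0.56, while the first halves of all trials, and the information-weighted pooled estimate, should both come out close to 1.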

In other words, even if we believe that adjustment is appropriate for sequential experiments where a single experiment leads to a decision that has to be made, we should not necessarily continue to believe that adjustment is appropriate for a meta-analyst weighing up evidence. One could argue that the trials should be weighted equally because each is a random realisation of the same experimental set-up. This would be to weight them by the expected information rather than the actual information. I think, however, that most statisticians would consider this was a bad idea and it would be rather perverse to insist on a weighting scheme that would then require us to make adjustments when an alternative exists that does not require us to do so.

Whatever your point of view, I hope that you agree that George Barnard’s advice to look at things with different spectacles deepens our understanding of what is going on.

Stephen: Thanks so much for this post. I’m sharing a remark and a query that unfortunately do not grapple with your specific example at all, sorry–traveling (hopefully others will jump in). It seems to me that in order for “looking at things with different spectacles” to deepen “our understanding of what is going on” we require a meta-level perspective or standpoint for scrutiny. On the face of it, if it is “rather perverse” to make adjustments, I am guessing it cannot be simply because “an alternative exists that does not require us to do so”. There would have to be a reason based on relevant evidence. Anyway, I apologize for not being able to reflect directly on the example. But before I forget, at some time I’d be curious to know about the “problems associated with Neyman confidence intervals that woke me to the problem of conditioning”. Were the problems akin to Examples 7 or 8, pp. 296-7, of Cox, D. R. and Mayo, D. (2010), “Objectivity and Conditionality in Frequentist Inference”? (I tried to paste a link).

Deborah: Perhaps I was too glib to say it was rather perverse. I had in mind an example of Don Berry’s in which he considers two researchers who run the same trial which, at each time point, gives exactly the same result. One of them, however, decided to look half way through. As it turns out the trial does not stop and continues to completion. However, some alpha has been spent and she now discovers that although her colleague can claim significance she, with exactly the same results, cannot.

Funnily enough I think that any Bayesian ought to expect the two cases to be handled differently, since there is no explanation as to why the two researchers behaved differently unless they had different prior distributions or utilities. However, from one point of view the case is disturbing and so it is a ‘relief’ I think to find that there is a framework in which the results could be treated identically. This, by the way, does not mean that I think that this is the only framework in which such a result should be considered.

As regards George Barnard’s confidence interval example I shall have to do some digging and see what I can turn up. If I made any notes at the time I did not keep them.