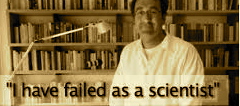

Would you buy a used car from this man? Probably not, but he thinks you might like to hire him as your chauffeur and brilliant conversationalist. I’m not kidding: fraudster Diederik Stapel is now offering what he calls ‘mind rides’ (see ad below video). He is prepared “to listen to what you have to say or talk to you about what fascinates, surprises or angers you”. He is already giving pedagogical talks on a train. This from Retraction Watch:

Diederik Stapel, the social psychologist who has now retracted 54 papers, recently spoke as part of the TEDx Braintrain, which took place on a trip from Maastricht to Amsterdam. Among other things, he says he lost his moral compass, but that it’s back.

Here’s a rough translation of the chauffeur ad from Stapel’s website (source is this blog):

Always on the move, from A to B, hurried, no time for reflection, for distance, for perspective. […] Diederik offers himself as your driver and conversation partner who won’t just get you from A to B, but who would also like to add meaning and disruption to your travel time. He will […] listen to what you have to say or talk to you about what fascinates, surprises or angers you. [Slightly paraphrased for brevity—Branko]

I don’t think I’d pay to have a Stapel “disruption” added to my travel time, would you? He sounds very much as he does in “Ontsporing”.[i]

[i] The following is from a review of his Ontsporing [“derailed”].

“Ontsporing provides the first glimpses of how, why, and where Stapel began. It details the first small steps that led to Stapel’s deception and highlights the fine line between research fact and fraud:

‘I was alone in my fancy office at University of Groningen.… I opened the file that contained research data I had entered and changed an unexpected 2 into a 4.… I looked at the door. It was closed.… I looked at the matrix with data and clicked my mouse to execute the relevant statistical analyses. When I saw the new results, the world had returned to being logical’. (p. 145)