Here’s an article by Nick Thieme on the same theme as my last blogpost. Thieme, who is Slate’s 2017 AAAS Mass Media Fellow, is the first person to interview me on p-values who (a) was prepared to think through the issue for himself (or herself), and (b) included more than a tiny fragment of my side of the exchange.[i] Please share your comments.

Will Lowering P-Value Thresholds Help Fix Science? P-values are already all over the map, and they’re also not exactly the problem.

By Nick Thieme (for Slate Magazine)

Illustration by Slate

Last week a team of 72 scientists released the preprint of an article attempting to address one aspect of the reproducibility crisis, the crisis of conscience in which scientists are increasingly skeptical about the rigor of our current methods of conducting scientific research.

Their suggestion? Change the threshold for what is considered statistically significant. The team, led by Daniel Benjamin, a behavioral economist from the University of Southern California, is advocating that the “probability value” (p-value) threshold for statistical significance be lowered from the current standard of 0.05 to a much stricter threshold of 0.005.

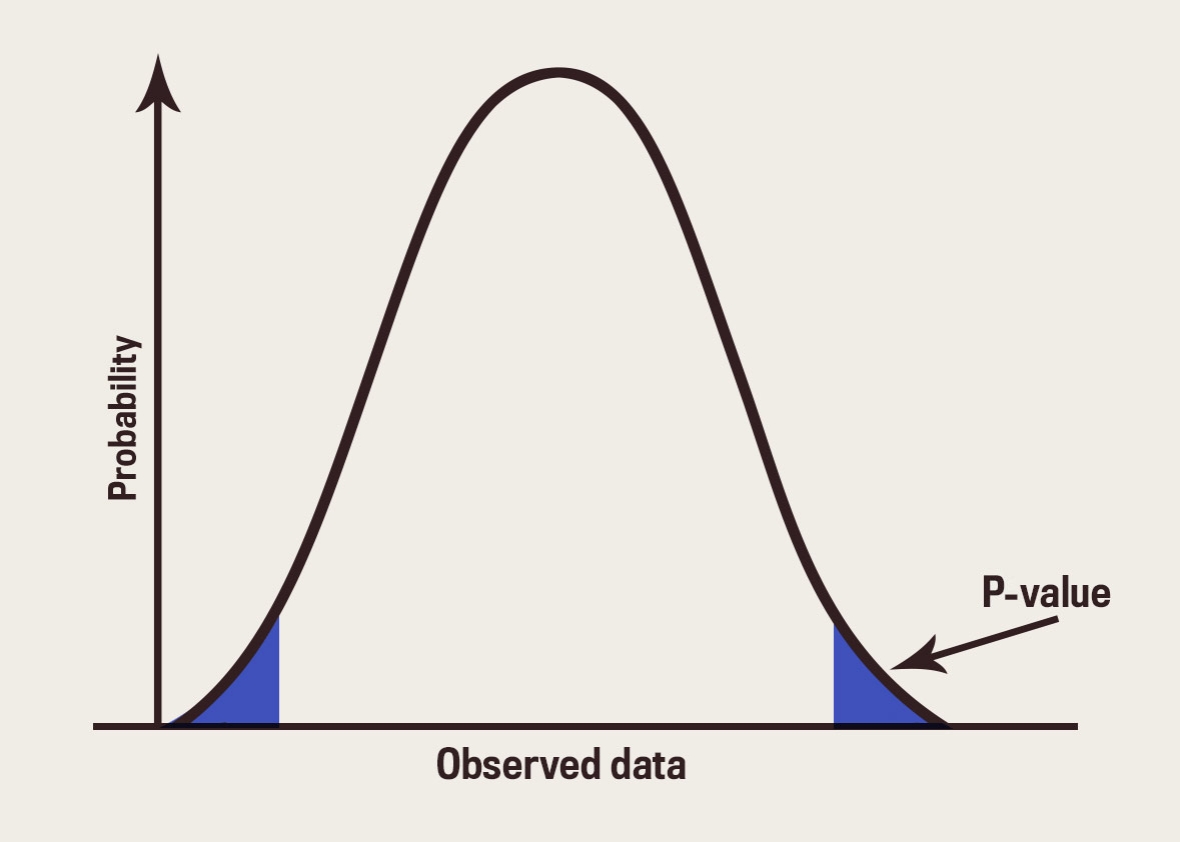

P-values are tricky business, but here’s the basics on how they work: Let’s say I’m conducting a drug trial, and I want to know if people who take drug A are more likely to go deaf than if they take drug B. I’ll state that my hypothesis is “drugs A and B are equally likely to make someone go deaf,” administer the drugs, and collect the data. The data will show me the number of people who went deaf on drugs A and B, and the p-value will give me an indication of how likely it is that the difference in deafness was due to random chance rather than the drugs. If the p-value is lower than 0.05, it means that the chance this happened randomly is very small—it’s a 5 percent chance of happening, meaning it would only occur 1 out of 20 times if there wasn’t a difference between the drugs. If the threshold is lowered to 0.005 for something to be considered significant, it would mean that the chances of it happening without a meaningful difference between the treatments would be just 1 in 200.
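The deafness example can be made concrete with a small simulation. This is a sketch under assumptions that are not in the article: a pooled two-proportion z-test, a shared 10% deafness rate, and 200 patients per drug, all hypothetical. Every simulated trial has no real difference between the drugs, so the share of p-values below a threshold estimates how often the test fires when H0 is true: roughly 1 in 20 at 0.05 and 1 in 200 at 0.005. That is a statement about the test's behavior under H0, not the probability that H0 is true given the data.

```python
import math
import random

def two_proportion_p_value(deaf_a, n_a, deaf_b, n_b):
    """Two-sided p-value for H0: drugs A and B have the same deafness rate
    (pooled two-proportion z-test, normal approximation)."""
    pooled = (deaf_a + deaf_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # nobody (or everybody) went deaf: no evidence of a difference
    z = (deaf_a / n_a - deaf_b / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

def simulate_p_values_under_h0(rate=0.1, n=200, trials=4000, seed=1):
    """Simulate trials in which H0 is TRUE (both drugs share one hypothetical
    deafness rate) and return the p-value from each trial."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(trials):
        deaf_a = sum(rng.random() < rate for _ in range(n))
        deaf_b = sum(rng.random() < rate for _ in range(n))
        p_values.append(two_proportion_p_value(deaf_a, n, deaf_b, n))
    return p_values

p_values = simulate_p_values_under_h0()
share_05 = sum(p < 0.05 for p in p_values) / len(p_values)
share_005 = sum(p < 0.005 for p in p_values) / len(p_values)
print(f"p < 0.05 in {share_05:.3f} of null trials; p < 0.005 in {share_005:.4f}")
```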

On its face, this doesn’t seem like a bad idea. If this change requires scientists to have more robust evidence before they can come to conclusions, it’s easy to think it’s a step in the right direction. But one of the issues at the heart of making this change is that it seems to assume there’s currently a consensus around how p-values ought to be used, and that this consensus could just be tweaked to be stronger.

P-value use already varies by scientific field and by journal policies within those fields. Several journals in epidemiology, where the stakes of bad science are perhaps higher than in, say, psychology (if they mess up, people die), have discouraged the use of p-values for years. And even psychology journals are following suit: In 2015, Basic and Applied Social Psychology, a journal that has been accused of bad statistical (and experimental) practice, banned the use of p-values. Many other journals, including PLOS Medicine and Journal of Allergy and Clinical Immunology, actively discourage the use of p-values and significance testing already.

On the other hand, the New England Journal of Medicine, one of the most respected journals in that field, codes the 0.05 threshold for significance into its author guidelines, saying “significant differences between or among groups (i.e P<.05) should be identified in a table.” That may not be an explicit instruction to treat p-values less than 0.05 as significant, but an author could be forgiven for reading it that way. Other journals, like the Journal of Neuroscience and the Journal of Urology, do the same.

Another group of journals, including Science, Nature, and Cell, avoids giving specific advice on exactly how to use p-values; rather, they caution against common mistakes and emphasize the importance of scientific assumptions, trusting the authors to respect the nuance of any statistical tools. Deborah Mayo, award-winning philosopher of statistics and professor at Virginia Tech, thinks this approach to statistical significance, where various fields have different standards, is the most appropriate. Strict cutoffs, regardless of where they fall, are generally bad science.

Mayo was skeptical that the change would have the kind of widespread benefit the authors assumed. Their assessment suggested tightening the threshold would reduce the rate of false positives (results that look true but aren’t) by a factor of two. But she questioned the assumption they had used to assess the reduction of false positives: that only 1 in 10 hypotheses a scientist tests is true. (Mayo said that if that were true, perhaps researchers should spend more time on their hypotheses.)
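The kind of calculation at issue can be sketched with the standard screening formula over a population ("urn") of hypotheses. The inputs are illustrative assumptions: the article's "1 in 10 hypotheses true" and a hypothetical power of 0.8 held fixed at both thresholds. This is not a reproduction of Benjamin et al.'s own calculation, and the output moves a lot with the assumed share of true hypotheses, which is the assumption Mayo questions.

```python
def false_positive_share(alpha, power, prop_true):
    """Expected share of 'significant' results that are false positives, for a
    hypothetical population of hypotheses in which a fraction `prop_true` are
    true and every test has the stated power."""
    false_pos = alpha * (1 - prop_true)  # true nulls that cross the threshold
    true_pos = power * prop_true         # real effects that are detected
    return false_pos / (false_pos + true_pos)

for prop_true in (0.1, 0.5):
    for alpha in (0.05, 0.005):
        share = false_positive_share(alpha, power=0.8, prop_true=prop_true)
        print(f"share of true hypotheses={prop_true}, alpha={alpha}: {share:.3f}")
```

Holding power at 0.8 while the threshold drops implicitly assumes larger samples; with sample sizes fixed, power falls and the computed improvement shrinks.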

But more broadly, she was skeptical of the idea that lowering the informal p-value threshold will help fix the problem, because she’s doubtful such a move will address “what almost everyone knows is the real cause of nonreproducibility”: the cherry-picking of subjects, testing hypothesis after hypothesis until one of them is proven correct, and selective reporting of results and methodology.
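Why "testing hypothesis after hypothesis" is so corrosive can be shown with one line of arithmetic. The sketch assumes k independent tests in which every null hypothesis is true; real fishing (flexible analyses of the same data) produces correlated tests, so treat the numbers as a rough guide. A stricter threshold makes a spurious hit less likely, but it does not remove the problem.

```python
def chance_of_some_significant_result(alpha, k):
    """Probability that at least one of k independent tests of true null
    hypotheses comes out significant at level alpha: 1 - (1 - alpha)^k."""
    return 1 - (1 - alpha) ** k

for k in (1, 5, 20, 100):
    at_05 = chance_of_some_significant_result(0.05, k)
    at_005 = chance_of_some_significant_result(0.005, k)
    print(f"k={k:3d}: alpha=0.05 -> {at_05:.3f}, alpha=0.005 -> {at_005:.3f}")
```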

Scientists are trying plenty of other ways to address the replication crisis. There’s the move toward pre-registration of studies before analyzing data, in order to avoid fishing for significance. Researchers are also now encouraged to make data and code public so a third party can rerun analyses efficiently and check for discrepancies. More negative results are being published. And, perhaps most importantly, researchers are actually conducting studies to replicate research that has already been published. Tightening standards around p-values might help, but the debate about reproducibility is more than just a referendum on the p-value. The solution will need to be more than that as well.

[i] We did not discuss that recent test ban (“Don’t ask don’t tell”). If we had, I might have pointed him to my post on “P-value madness”.

Link to Nick Thieme’s Slate article: “Will Lowering P-Value Thresholds Help Fix Science? P-values are already all over the map, and they’re also not exactly the problem.”

Deborah:

Think of three ways of evaluating evidence:

no p-value; an unadjusted p-value; a p-value adjusted for multiplicity and modeling.

Epi now uses unadjusted p-values _knowing full well they ask lots of questions_. They could move to stronger p-values, say 0.005, and have them adjusted for multiple testing. But that would destroy their very successful “science” business model.

At some point it would be useful to bring the thinking of Boos and Stefanski (2011, The American Statistician) into the discussion. They suggested going from 0.05 to 0.001. Note that the FDA rule of two studies significant at 0.05 (two of k, actually) is in the direction of a smaller p-value.

Stan

I can’t let this comment go without reply. There is no FDA rule of two 0.05 studies out of k. Despite the recent publication by van Ravenzwaaij and Ioannidis, in which the idea of this requirement is suggested, it is not a regulatory rule. I remember speaking in the late ’90s to the marketing organisation of a large pharma company in New York, during which this idea was proposed: run as many studies as it takes until you have two positive studies, and marketing authorization would follow. I told them at the time that they would not be successful following such a strategy. Ultimately it is the weight of evidence that matters, and if there are k-2 negative studies, sponsors had better have a better argument than “look we have 2 +ves and that is all your rule requires”. They obviously hadn’t read Fisher:

“In order to assert that a natural phenomenon is experimentally demonstrable we need, not an isolated record, but a reliable method of procedure. In relation to the test of significance, we may say that a phenomenon is experimentally demonstrable when we know how to conduct an experiment which will rarely fail to give us a statistically significant result.” The Design of Experiments, 1947.

If k is large, 2 out of k is not “rarely fail”.

van Ravenzwaaij, D. and J.P. Ioannidis, A simulation study of the strength of evidence in the recommendation of medications based on two trials with statistically significant results. PLoS One, 2017. 12(3): p. e0173184.
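The "2 of k" question can be put in numbers with a binomial calculation. The sketch assumes (an illustration, not a description of FDA practice) that each trial is an independent two-sided test at 0.05 and that only a favorable result counts as positive, so a drug with no effect yields a positive trial with probability 0.025. Requiring two of two then corresponds to 0.025² = 0.000625, the "smaller p-value" Stan mentions; but the chance of collecting at least two positives grows steadily with k.

```python
def prob_at_least_two_positive(alpha, k):
    """P(at least 2 of k independent trials are 'positive') when each trial
    is positive with probability alpha, e.g. a drug with no effect."""
    none = (1 - alpha) ** k
    exactly_one = k * alpha * (1 - alpha) ** (k - 1)
    return 1 - none - exactly_one

for k in (2, 5, 10, 20):
    print(f"k={k:2d}: {prob_at_least_two_positive(0.025, k):.4f}")
```

With k = 20 the chance is roughly 9%, one way of seeing why, as Andy says, the k-2 negative studies cannot simply be set aside.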

Andy: Thank you for this. I totally agree with you and am glad you quote Fisher (with my favorite remark). It’s true that determining when “we know how to” is somewhat vague (do you agree?), but that shouldn’t stop people from grasping the intent well enough to at least ascertain when this clearly has not been shown.

If it wasn’t vague, it wouldn’t be Fisher!

Andy:

> “look we have 2 +ves and that is all your rule requires”. They obviously hadn’t read Fisher:

They also have no knowledge of how regulatory agencies work.

It’s not that they could never get away with such a strategy in pre-market studies; people do the work at regulatory agencies, and sometimes people really screw up.

I doubt that anything this blatant has ever been overlooked if the negative studies are not _illegally_ hidden. That apparently can happen with negative studies done in some third world countries, but positive studies from the same countries should set off alarms given that they were not preregistered.

Keith O’Rourke

Passages like this are what make me pessimistic about the prospect of achieving widespread understanding of what the p-value quantifies:

“The data will show me the number of people who went deaf on drugs A and B, and the p-value will give me an indication of how likely it is that the difference in deafness was due to random chance rather than the drugs. If the p-value is lower than 0.05, it means that the chance this happened randomly is very small—it’s a 5 percent chance of happening, meaning it would only occur 1 out of 20 times if there wasn’t a difference between the drugs. If the threshold is lowered to 0.005 for something to be considered significant, it would mean that the chances of it happening without a meaningful difference between the treatments would be just 1 in 200.”

Here we have two statements treating the p-value as P(H0|data), followed by two statements correctly treating it as P(data|H0). The author clearly thinks they are equivalent. And he’s presumably been actively researching the topic.

This is a problem that worries me greatly – it seems that most people who use and interpret p-values think that they are calculating “the probability that my hypothesis is wrong”, which leads them to overconfidence in the informative value of any statistically significant results that they do get.

I certainly agree that multiple testing (which is sometimes explicit and sometimes implicit) and biased selection are the chief problems that lead to non-reproducible results getting published. But I think the ubiquitous misinterpretation of the p-value as some variant of “the probability I’m wrong” plays a role too, in that it makes people less cautious than they should be in interpreting significant results that are produced even in the absence of clear selection and multiple testing effects.

Ben: I don’t know if it will make you less or even more pessimistic to hear what I have to say about the phrase “the probability our statistically significant results are due to chance” (e.g., https://errorstatistics.com/2013/11/27/probability-that-it-be-a-statistical-fluke-ii/)

I do not endorse Thieme’s precise phrasing, but deny he’s asserting a posterior probability in Ho.

“Pr(test T produces a statistically significant result, p < a; computed under the assumption that Ho adequately describes the data-generating procedure) ≤ a”

is a valid error probabilistic claim. The missing premise is the one Andy Grieve happens to give us, from Fisher. We then infer from knowing how to bring about alpha-significant results that we've evidence of "a genuine experimental phenomenon".

You might think the error probabilistic claim is convoluted and not what we want, and I say (a) it’s the most natural way in which humans regularly reason in the face of error-prone inferences and (b) no posterior probabilism available computes a number that represents how well tested or warranted scientific claims are. The deductive theory of probability is apt for assigning probabilities to events given priors, but statistical inference is inductive: it goes beyond the premises. It’s the supposition that probabilism is wanted that is behind the misinterpretations. What has it given us? (i) default posteriors based on (one or another) default prior that is not supposed to represent beliefs or even be probabilities, but makes the data dominant in some sense, (ii) degree-of-belief posteriors, or (iii) diagnostic screening error rates considered over urns of test hypotheses. I don’t say there’s no role for these, but I deny they’re what’s wanted in a normative assessment of evidence, i.e., an assessment of what’s warranted to infer from data.
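The "≤ a" in the error-probabilistic claim matters for discrete data: attainable error probabilities jump, so a test at level a can have an actual size below a. A sketch with a hypothetical one-sided exact binomial test of H0: p = 0.5 on 20 trials (not an example from the thread):

```python
from math import comb

def exact_binomial_test_size(n, alpha, p0=0.5):
    """For the one-sided test that rejects H0: p = p0 when X >= c, find the
    smallest c with P(X >= c; H0) <= alpha; return c and that tail probability."""
    def upper_tail(c):
        return sum(comb(n, k) * p0**k * (1 - p0)**(n - k) for k in range(c, n + 1))
    for c in range(n + 1):
        size = upper_tail(c)
        if size <= alpha:
            return c, size
    return n + 1, 0.0  # no attainable rejection region at this alpha

c, size = exact_binomial_test_size(n=20, alpha=0.05)
print(c, round(size, 4))  # -> 15 0.0207
```

Rejecting at 14 or more heads would exceed 0.05, so the level-0.05 test rejects only at 15 or more, and its actual error probability under H0 is about 0.021.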

Then why not demand that he append the following after “If the p-value is lower than 0.05, it means that the chance this happened randomly is very small”:

“if and only if my model of how the world works is true; and I’ve no evidence (especially given these results) that it is.”

Is there a reason why claims of knowledge are more warranted when explications of models/hypotheses follow, rather than precede, experiments?

I’m a big fan of Popper and his claim that people work hard (often subconsciously) to manufacture ad hoc explanations for the unexpected. But maybe I’ve not been paying attention.

Mayo, thank you for the link to your previous post. I agree very much that Fisher’s advice makes it clear how we should be reasoning with p-values. I also think that the casual use of “the probability these results were do to chance” muddies things in a way that lead to people incorrectly thinking that their p-values are posterior probabilities.

I also agree that “It’s the supposition that probabilism is wanted that is behind the misinterpretations.” I don’t think that many people who use p-values but do not have expertise in statistics or philosophy of science realize this. I’ll admit that this belief of mine about the understandings of others comes from my subjective experiences which are influenced by selection bias. When I discuss the use of p-values with students or friends or collaborators, I find them frequently making probabilistic claims about their hypotheses. They do believe that p = 0.03 means there is a 97% chance that their hypothesis is correct. They are looking for P(H|data), and they don’t know that this is not on offer. And so I take statements like “the probability our statistically significant results are due to chance is 0.03” as indicative of a confusion regarding how inductive frequentist inference works in the first place, rather than as a loose restatement of P(T(X)>T(x)|Ho) < 0.03. But maybe I should be more generous.

On further reflection, I should qualify my claim that:

‘that the casual use of “the probability these results were do[sic] to chance” muddies things in a way that lead to people incorrectly thinking that their p-values are posterior probabilities.’

I think the causality goes both ways. I see this language as both leading to and resulting from confusion.

Ben: Interesting, thanks. Now if the person interpreting p = .03 were reporting the equivalent confidence interval, it would permit inferring H1 at confidence level .97 (I’m thinking a one-sided test for a mean). Now one way to understand a .97 confidence coefficient is that this particular interval was generated by a method that is correct 97% of the time. And some, like Fraser (confidence) and Fisher, when being fiducial, would say this entitles you to assign a .97 probability to the estimate–BUT only understood in the sense that the .97 is qualifying the method’s performance! (it also must be relevant)

The severe tester might allow the inference to have severity .97 (but a single isolated significant test would be insufficient for anything more than an indication).

So you could say that when people assign the probability to the result, they are only reflecting an intrinsic use of probability as attaching to the method.
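The correspondence between p = .03 and a .97 one-sided confidence bound can be checked numerically. This sketch assumes a one-sided z-test for a mean with known sigma; the numbers (mu0 = 0, sigma = 1, n = 25) are hypothetical, and the observed mean is chosen to give p = 0.03. The .97 lower bound then lands exactly on mu0, so "infer mu > mu0 at confidence .97" and "p = .03" are two readings of the same computation, with .97 describing how often the bound-generating method covers the true mean.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1

def one_sided_p_value(xbar, mu0, sigma, n):
    """p-value for H0: mu <= mu0 vs H1: mu > mu0 (z-test, sigma known)."""
    z = (xbar - mu0) / (sigma / n ** 0.5)
    return 1 - STD_NORMAL.cdf(z)

def lower_confidence_bound(xbar, sigma, n, level):
    """One-sided lower confidence bound for mu at the given confidence level."""
    return xbar - STD_NORMAL.inv_cdf(level) * sigma / n ** 0.5

# Hypothetical setup: observed mean chosen so the one-sided p-value is 0.03.
mu0, sigma, n = 0.0, 1.0, 25
xbar = mu0 + STD_NORMAL.inv_cdf(0.97) * sigma / n ** 0.5

p = one_sided_p_value(xbar, mu0, sigma, n)
bound = lower_confidence_bound(xbar, sigma, n, level=1 - p)
print(f"p = {p:.3f}; {1 - p:.0%} lower bound = {bound:.6f}")
```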

Most people who’d like to have a probability that a certain hypothesis is wrong or false don’t know what this probability actually means, and they don’t even know that they don’t know this.

I meant of course “a probability that a certain hypothesis is true or false”.

By the way, ultimately I think that lowering the threshold just makes the business of fishing for significance a bit harder, which is probably not a bad thing, although I totally agree that not much good comes from fixed thresholds, whatever they are.