Stephen Senn

Head of Competence Center for Methodology and Statistics (CCMS)

Luxembourg Institute of Health

Twitter @stephensenn

Automatic for the people? Not quite

What caught my eye was the estimable (in its non-statistical meaning) Richard Lehman tweeting about the equally estimable John Ioannidis. For those who don’t know them, the former is a veteran blogger who keeps a very cool and shrewd eye on the latest medical ‘breakthroughs’ and the latter a serial iconoclast of idols of scientific method. This is what Lehman wrote

Ioannidis hits 8 on the Richter scale: http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0173184 … Bayes factors consistently quantify strength of evidence, p is valueless.

Since Ioannidis works at Stanford, which is located in the San Francisco Bay Area, he has every right to be interested in earthquakes but on looking up the paper in question, a faint tremor is the best that I can afford it. I shall now try and explain why, but before I do, it is only fair that I acknowledge the very generous, prompt and extensive help I have been given to understand the paper[1] in question by its two authors Don van Ravenzwaaij and Ioannidis himself.

What van Ravenzwaaij and Ioannidis (R&I) have done is investigate the FDA’s famous two trials rule as a requirement for drug registration. To do this R&I simulated two-armed parallel group clinical trials according to the following combinations of scenarios (p4).

Thus, to sum up, our simulations varied along the following dimensions:

1. Effect size: small (0.2 SD), medium (0.5 SD), and zero (0 SD)

2. Number of total trials: 2, 3, 4, 5, and 20

3. Number of participants: 20, 50, 100, 500, and 1,000

The first setting defines the treatment effect in terms of common within-group standard deviations, the second defines the total number of trials submitted to the FDA with exactly two of them significant and the third the total number of patients per group.

They thus had 3 x 5 x 5 = 75 simulation settings in total. In each case the simulations were run until 500 cases arose for which two trials were significant. For each of these cases they calculated a one-sided Bayes factor and then proceeded to judge the FDA’s rule based on P-values according to the value the Bayes factor indicated.
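The design just described can be sketched in a few lines. This is only an illustration and not R&I's code: it assumes a known-variance Normal shortcut in place of their t-tests, it counts a trial as significant only when z exceeds 1.96 in the favourable direction, and the function names `simulate_programme` and `collect_cases` are invented here.

```python
import math
import random

Z_CRIT = 1.959964  # assumed rule: two-sided 5% level, favourable direction only

def simulate_programme(effect, n_per_group, n_trials, rng):
    """Return one z-statistic per trial (known-SD Normal shortcut, SD = 1)."""
    shift = effect * math.sqrt(n_per_group / 2.0)
    return [rng.gauss(shift, 1.0) for _ in range(n_trials)]

def collect_cases(effect, n_per_group, n_trials, n_cases, rng):
    """Keep simulating programmes until n_cases have exactly two significant trials."""
    cases = []
    while len(cases) < n_cases:
        z = simulate_programme(effect, n_per_group, n_trials, rng)
        if sum(v > Z_CRIT for v in z) == 2:
            cases.append(z)
    return cases

# The 3 x 5 x 5 grid of settings quoted above.
settings = [
    (effect, n_trials, n_per_group)
    for effect in (0.2, 0.5, 0.0)
    for n_trials in (2, 3, 4, 5, 20)
    for n_per_group in (20, 50, 100, 500, 1000)
]

# A small demonstration run: 10 retained cases instead of 500.
demo_cases = collect_cases(0.5, 100, 3, 10, random.Random(1))
```

Note that the retained cases are, by construction, only those in which the two trials rule has been met, which is the point taken up later in the post.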

In my opinion this is a hopeless mishmash of two systems: the first, (frequentist) conditional on the hypotheses and the second (Bayesian) conditional on the data. They cannot be mixed to any useful purpose in the way attempted and the result is not only irrelevant frequentist statistics but irrelevant Bayesian.

Before proceeding to discuss the inferential problems, however, I am going to level a further charge of irrelevance as regards the simulations. It is true that the ‘two trials rule’ is rather vague in that it is not clear how many trials one is allowed to run to get two significant ones. In my opinion it is reasonable to consider that the FDA might accept two out of three but it is frankly incredible that they would accept two out of twenty unless there were further supporting evidence. For example, if two large trials were significant but 18 smaller ones were not, but significant as a set in a meta-analysis, one could imagine the programme passing. Even this scenario, however, is most unlikely and I would be interested to know of any case of any sort in which the FDA has accepted a ‘two out of twenty’ registration.

Now let us turn to the mishmash. Let us look, first of all, at the set up in frequentist terms. The simplest common case to take is the ‘two out of two’ significant scenario. Sponsors going into phase III will typically perform calculations to target at least 80% power for the programme as a whole. Thus 90% power for individual trials is a common standard since the product of the powers is just over 80%. For the two effect sizes of 0.2 and 0.5 that R&I consider this would, according to nQuery®, yield 527 and 86 patients per arm respectively. The overall power of the programme would be 81% and the joint two-sided type I error rate would be 2 x (1/40)² = 1/800, reflecting the fact that each of two two-sided tests would have to be significant at the 5% level but in the right direction.
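These planning figures can be checked roughly with the usual Normal-approximation formula for a two-arm parallel group trial. The sketch below is not what nQuery does internally; the function name `n_per_arm` is invented, and the approximation comes out one patient per arm below the quoted 527 and 86, presumably because the package works with the t-distribution.

```python
import math
from statistics import NormalDist

def n_per_arm(effect, power=0.90, alpha_two_sided=0.05):
    """Normal-approximation sample size per arm; effect is in SD units."""
    nd = NormalDist()
    z_alpha = nd.inv_cdf(1 - alpha_two_sided / 2)
    z_beta = nd.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect ** 2)

n_small = n_per_arm(0.2)    # 526 under the Normal approximation
n_medium = n_per_arm(0.5)   # 85 under the Normal approximation

programme_power = 0.90 ** 2         # both trials must succeed: 0.81
joint_type_one = 2 * (1 / 40) ** 2  # either direction, both trials: 1/800
```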

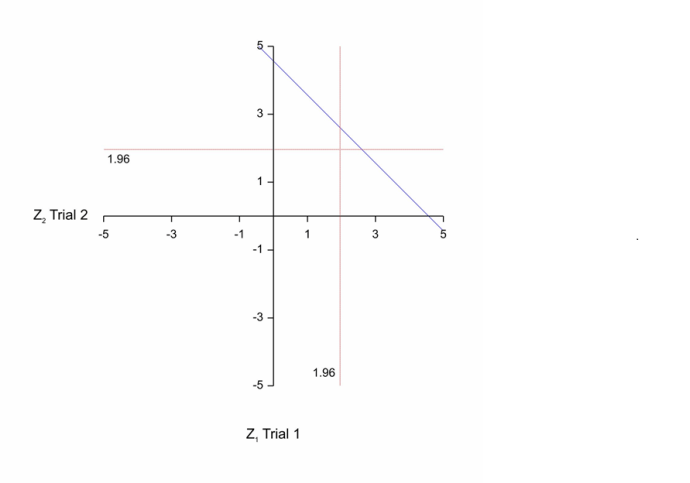

Now, of course, these are planned characteristics in advance of running a trial. In practice you will get a result and then, in the spirit of what R&I are attempting, it would be of interest to consider the least impressive result that would just give you registration. This, of course, is P=0.05 for each of the two trials. At this point, by the way, I note that a standard frequentist objection can be entered to the two trials rule. If the designs of two trials are identical, then given that they are of the same size, the sufficient statistic is simply the average of the two results. If conducted simultaneously there would be no reason not to use this. This leads to a critical region for a more powerful test based on the average result from the two providing a 1/1600 type I error rate (one-sided) illustrated in the figure below that is to the right and above the blue diagonal line. The corresponding region for the two-trials rule is to the right of the vertical red line and above the horizontal one. The just ‘significant’ value for the two-trials rule has a standardised z-score of 1.96 x √2 = 2.77, whereas the rule based on the average from the two trials would have a z-score of 3.23. In other words, evidentially, the value according to the two-trials rule is less impressive[2].
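The two z-scores being compared can be reproduced as follows. This is a sketch under the Normal approximation, with variable names chosen here for illustration: the pooled statistic for two equally sized trials is (z1 + z2)/√2, and the pooled-rule boundary is obtained by inverting the Normal distribution at a one-sided level of 1/1600.

```python
import math
from statistics import NormalDist

nd = NormalDist()

# Each trial sits exactly on its own boundary, P = 0.05 two-sided.
z_single = nd.inv_cdf(0.975)

# Pooled z-score for that least impressive registrable result:
# (z_single + z_single) / sqrt(2) = z_single * sqrt(2).
z_two_trials_boundary = z_single * math.sqrt(2)

# Boundary for a pooled test with the same one-sided type I error, 1/1600.
z_pooled_rule = nd.inv_cdf(1 - 1 / 1600)

# Two-sided P-value that the pooled result carries at the two-trials boundary.
p_two_sided_at_boundary = 2 * (1 - nd.cdf(z_two_trials_boundary))
```

The first boundary is lower than the second, which is the sense in which a result that only just satisfies the two-trials rule is evidentially less impressive.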

Now, the Bayesian will argue, that the frequentist is controlling the behaviour of the procedure if one of two possible realities out of a whole range applies but has given no prior thought to their likely occurrence or, for that matter, to the occurrence of other values. If, for example, moderate effects sizes are very unlikely, but it is quite plausible that the treatment has no effect at all and the trials are very large, then even though their satisfying the two trials rule would be a priori unlikely, if it was only minimally satisfied, it might actually imply that the null hypothesis was likely true.

A possible way for the Bayesian to assess the evidential value is to assume, just for argument’s sake, that the null hypothesis and the set of possible alternative hypotheses are equally likely a priori (the prior odds are one) and then calculate the posterior probability and hence odds given the observed data. The ratio of the posterior odds to the prior odds is known as the Bayes factor[3]. Citing a paper[4] by Rouder et al describing this approach R&I then use the BayesFactor package created by Morey and Rouder to calculate the Bayes factor corresponding to every case of two significant trials they generate.

Actually it is not the Bayes factor but a Bayes factor. As Morey and Rouder make admirably clear in a subsequent paper[5], what the Bayes factor turns out to be depends very much on how the probability is smeared over the range of the alternative hypothesis. This can perhaps be understood by looking at the ratios of likelihoods (relative to the value under the null) when P=0.05 for each of the two trials as a function of the true (unknown) effect size for the sample sizes of 527 and 86 that would give 90% power for the values of the effect sizes (0.2 and 0.5) that R&I consider. The logs of these (chosen to make plotting easier) are given in the figure below. The blue curve corresponds to the smaller effect size used in planning (0.2) and hence the larger sample size (527) and the red curve corresponds to the larger effect size (0.5) and hence the smaller sample size (86). Given the large number of degrees of freedom available, the Normal distribution likelihoods have been used. The values of the statistic that would be just significant at the 5% level (0.1207 and 0.2989) for the two cases are given by the vertical dashed lines and, since these are the values that we assume observed in the two cases, each curve reaches its respective maximum at the relevant value.

Wherever a value on the curve is positive, the ratio of likelihoods is greater than one and the posited value of the effect size is supported against the null. Wherever it is negative, the ratio is less than one and the null is supported. Thus, whether the posited values of the treatment effect that make up the alternative values are supported as a set or not depends on how you smear the prior probability. The Bayes factor is the ratio of the prior-weighted integral of the likelihoods. In this case the likelihood under the null is a constant so the conditional prior under the alternative is crucial. There is no automatic solution and careful choice is necessary. So what are you supposed to do? Well, as a Bayesian you are supposed to choose a prior distribution that reflects what you believe. At this point, I want to make it quite clear that if you think you can do it you should do so and I don’t want to argue against that. However, this is really hard and it has serious consequences[6]. Suppose that sample size of 527 has been used corresponding to the blue curve. Then any value of the effect size greater than 0 and less than about 2 x 0.1207 = 0.2414 has more support than the null hypothesis itself but any value more than 0.2414 is less supported than the null. How this pans out in your Bayes factor now depends on your prior distribution. If your prior maintains that all possible values of the effect size when the alternative hypothesis is true must be modest (say never greater than 0.2414), then they are all supported and so is the set. On the other hand, if you think that unless the null hypothesis is true, only values greater than 0.2414 are possible, then all such values are unsupported and so is the set. In general, the way the conditional prior smears the probability is crucial.
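The dependence on the conditional prior can be made concrete with a small numerical sketch. Assumptions, none of them from R&I: Normal likelihoods with unit standard deviation, both trials observing exactly 0.1207 with 527 patients per arm, and two deliberately artificial uniform priors, one confined to values below 0.2414 and one to values between 0.2414 and 1. The function names are invented for illustration.

```python
import math

def log_lr(delta, d_obs, n_per_arm, n_trials=2):
    """Log likelihood ratio of effect delta versus zero, every trial observing d_obs."""
    se2 = 2.0 / n_per_arm  # variance of a difference in means when SD = 1
    return n_trials * (d_obs ** 2 - (d_obs - delta) ** 2) / (2.0 * se2)

def bayes_factor_uniform(lo, hi, d_obs, n_per_arm, steps=2000):
    """Bayes factor for a uniform prior on [lo, hi] against the point null (midpoint rule)."""
    width = (hi - lo) / steps
    total = sum(
        math.exp(log_lr(lo + (i + 0.5) * width, d_obs, n_per_arm))
        for i in range(steps)
    )
    # Prior density is 1 / (hi - lo), so this is the prior-weighted average ratio.
    return total * width / (hi - lo)

d_obs = 0.1207
n = 527
crossover = 2 * d_obs  # 0.2414: support switches sign here

bf_modest = bayes_factor_uniform(0.0, crossover, d_obs, n)  # favours the alternative
bf_large = bayes_factor_uniform(crossover, 1.0, d_obs, n)   # favours the null
```

Same data, same likelihood, opposite verdicts: the only thing that has changed between `bf_modest` and `bf_large` is where the prior puts its mass.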

Be that as it may, I doubt that choosing, ‘a Cauchy distribution with a width of r = √2/2’ as R&I did would stand any serious scrutiny. Bear in mind that these are molecules that have passed a series of in vitro and in vivo pre-clinical screens as well as phase I, IIa and IIb before being put to the test in phase III. However, if R&I were serious about this, they would consider how well the distribution works as a prediction as to what actually happens in phase III and examine some data.

Instead, they assume (as far as I can tell) that the Bayes factor they calculate in this way is some sort of automatic gold standard by which any other inferential statistic can and should be judged, whether or not the distribution on which the Bayes factor is based is reasonable. This is reflected in Richard Lehman’s tweet ‘Bayes factors consistently quantify strength of evidence’ which, however, needs to be rephrased as ‘Bayes factors coherently quantify strength of evidence for You if You have chosen coherent prior distributions to construct them.’ It’s a big if.

R&I then make a second mistake of simultaneously conditioning on a result and a hypothesis. Suppose their claim is correct that in each of the cases of two significant trials that they generate the FDA would register the drug without further consideration. Then, for the first two of the three cases ‘Effect size: small (0.2 SD), medium (0.5 SD), and zero (0 SD)’ the FDA has got it right and for the third it has got it wrong. By the same token wherever any decision based on the Bayes factor would disagree with the FDA it would be wrong in the first two cases and right in the third. However, this is completely useless information. It can’t help us decide between the two approaches. If we want to use true posited values of the effect size, we have to consider all possible outcomes for the two trial rule, not just the ones that indicate ‘register’. For the cases that indicate ‘register’, it is a foregone conclusion that we will have 100% success (in terms of decision-making) in the first two cases and 100% failure in the second. What we need to consider also is the situation where it is not the case that two trials are significant.
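What considering all possible outcomes would involve can be sketched by computing how often the two trials rule says ‘register’ under each posited effect size, rather than looking only at the cases where it does. The sketch assumes the ‘two out of two’ scenario, a Normal approximation, 527 patients per arm and significance in the favourable direction only; `prob_register` is a name invented here.

```python
import math
from statistics import NormalDist

def prob_register(effect, n_per_arm, z_crit=1.959964):
    """P(both of two trials are significant in the favourable direction)."""
    nd = NormalDist()
    power = 1 - nd.cdf(z_crit - effect * math.sqrt(n_per_arm / 2))
    return power ** 2

# Probability of 'register' and of 'do not register' for each posited effect size.
rates = {effect: prob_register(effect, 527) for effect in (0.0, 0.2, 0.5)}
non_registration = {effect: 1 - p for effect, p in rates.items()}
```

Under a zero effect the rule registers about once in 1600 programmes; under an effect of 0.2 it fails to register roughly one programme in five. Neither number can be seen if one conditions on registration having occurred.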

If, on the other hand R&I wish to look at this in Bayesian terms, then they have also picked this up the wrong way. If they are committed to their adopted prior distribution, then once they have calculated the Bayes factor there is no more to be said and if they simulate from the prior distribution they have adopted, then their decision making will, as judged by the simulation, turn out to be truly excellent. If they are not committed to the prior distribution, then they are faced with the sore puzzle that is Bayesian robustness. How far can the prior distribution from which one simulates be from the prior distribution one assumes for inference in order for the simulation to be a) a severe test but b) not totally irrelevant?

In short the R&I paper, in contradistinction to Richard Lehman’s claim, tells us nothing about the reasonableness of the FDA’s rule. That would require an analysis of data. Automatic for the people? Not quite. To be Bayesian, ‘to thine own self be true’. However, as I have put it previously, this is very hard and ‘You may believe you are a Bayesian but you are probably wrong’[7].

Acknowledgements

I am grateful to Don van Ravenzwaaij and John Ioannidis for helpful correspondence and to Andy Grieve for helpful comments. My research on inference for small populations is carried out in the framework of the IDEAL project http://www.ideal.rwth-aachen.de/ and supported by the European Union’s Seventh Framework Programme for research, technological development and demonstration under Grant Agreement no 602552.

References

1. van Ravenzwaaij, D. and J.P. Ioannidis, A simulation study of the strength of evidence in the recommendation of medications based on two trials with statistically significant results. PLoS One, 2017. 12(3): p. e0173184.

2. Senn, S.J., Statistical Issues in Drug Development. Statistics in Practice. 2007, Hoboken: Wiley. 498.

3. O’Hagan, A., Bayes factors. Significance, 2006(4): p. 184-186.

4. Rouder, J.N., et al., Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 2009. 16(2): p. 225-237.

5. Morey, R.D., J.-W. Romeijn, and J.N. Rouder, The philosophy of Bayes factors and the quantification of statistical evidence. Journal of Mathematical Psychology, 2016. 72: p. 6-18.

6. Grieve, A.P., Discussion of Piegorsch and Gladen (1986). Technometrics, 1987. 29(4): p. 504-505.

7. Senn, S.J., You may believe you are a Bayesian but you are probably wrong. Rationality, Markets and Morals, 2011. 2: p. 48-66.

I very much enjoyed this post. Thanks.

Stephen: Thank you so much for your post! The future of scientific integrity rests upon the few who, like you, have the knowledge and courage to counter some of the latest fads and quite confused, if well-meaning, arguments against methods capable of error control.

What’s really “off the Richter scale” is the extent to which some of the leaders in the new “methodological activism” embrace the echo chamber of uncritical acceptance of methods that are flexible enough to find evidence in favor of what you already believe, rather than be self-critical about beliefs. Ioannidis may be on the side of the angels, but he leaves many readers having to guess if any given paper is one of his important and valid critiques, or one that’s on shaky grounds about statistical concepts and methods.

I did, over many years, quiz many people on the 2 out of k question. And I suspect the FDA is not entirely consistent. When I was in industry a single very large trial was submitted, and the FDA insisted on a 2nd trial. The original trial was divided at random many times, as I remember it, always getting two significant trials. The company lost the argument.

I was told, on some authority, k could be whatever you like. I doubt anyone went for a large k due to cost. In light of the Boos and Stefanski paper on the repeatability of p-values, some latitude seems essential to have any compound approved. Or you can increase n in each trial.

You can always change the formulation, and indeed the new formulation might be better.

Thanks, Stan. I doubt, despite what you were told, that the FDA would accept 2 out of large k if, say, the other trials trended in the wrong direction.

A number of people have suggested possible alternatives to the two trials rule. Here are some references to what I have called the pooled trial rule.

1) Senn, S.J., Statistical Issues in Drug Development. Statistics in Practice, ed. V. Barnett. 1997 & 2007, Chichester: John Wiley. 405.

2) Fisher, L.D., One large, well-designed, multicenter study as an alternative to the usual FDA paradigm. Drug Information Journal, 1999. 33: p. 265-271.

3) Rosenkranz, G., Is it Possible to Claim Efficacy if One of Two Trials is Significant While the Other Just Shows a Trend? Drug Information Journal, 2002. 36: p. 875-879.

4) Darken, P.F. and S.-Y. Ho, A note on sample size savings with the use of a single well-controlled clinical trial to support the efficacy of a new drug. Pharmaceutical Statistics, 2004. 3: p. 61-63.

5) Shun, Z., et al., Statistical consideration of the strategy for demonstrating clinical evidence of effectiveness–one larger vs two smaller pivotal studies. Statistics in Medicine, 2005. 24(11): p. 1619-37; discussion 1639-56.

Having independent verification might have some value compared to meta-analytic type pooling but if all that the sponsor is going to do is provide an identical second trial, there does not seem to be much point.

The point, however, about a pooled trials approach is that any meta-analysis should be required to show ‘significance’ at a much more stringent level than 5%. I think that this point is regularly overlooked in conventional meta-analysis.

Perhaps as absolute power corrupts, absolute influence dissipates critical reflection and the ability to see criticism as potentially helpful.

I do recall suggesting to Stephen Goodman when he was pointing out the technical mistakes in Ioannidis’ Why Most Published Research Findings Are False in 2005/6 that “he is not a statistician and he is just doing back of the envelope calculations to get across an important message”.

At some point, technical mistakes do become more important than getting across an important message – especially if that message is mostly technical.

In the blogosphere there does seem to be the assumption that when statisticians are recruited into a regulatory agency they become docile and brain dead. This is dangerous http://www.reuters.com/article/us-science-europe-glyphosate-exclusive-idUSKBN17N1X3

I have worked in an FDA-like capacity and though there are serious confidentiality requirements, I think I can say that even one out of three trials being non-significant would set off very loud alarm bells in most reviews. Not that it won’t be approved but the review will be more thorough and circumspect.

Of course there are statisticians who are not that insightful and those who are not statisticians are at risk of unknowingly collaborating with one?

Keith O’Rourke

Phan: “Perhaps as absolute power corrupts, absolute influence dissipates critical reflection…

I do recall suggesting to Stephen Goodman when he was pointing out the technical mistakes in Ioannidis’ Why Most Published Research Findings Are False in 2005/6 that “he is not a statistician and he is just doing back of the envelope calculations to get across an important message”.

And the important message in the 2005 paper was: if you publish a “discovery” based on a single statistically significant result, with p-hacking and cherry-picking to boot, and further assume the hypothesis you chose to study was a random sample from an urn of null hypotheses, a large % of which are assumed to be true, then, if you use a computation from diagnostic screening (transposing the conditional), it follows that the %(Ho|rejected nulls) > Pr(reject Ho;Ho), the latter being the type I error probability. No argument is given as to why the former quantity (which I call the false finding rate since false discovery rate was already defined differently) is relevant for assessing the evidence of no effect, nor why its complement, the so-called PPV, being high is desirable for inferring good evidence of a genuine effect. The computation, moreover, lauds fields with high prevalence rates (even if due to crud factors) and casts doubt upon potentially stringent inferences in fields where “prevalence” is low or thought to be low. I worry as well that it has firmly fixed a confusion between the probability of a type I error and the “false finding” probability so that people erroneously suppose that high power means low type I error, instead of there being the usual trade-off.

The irony in Goodman’s criticism (the paper being with Greenland) is that Ioannidis’ computation follows the one Goodman uses to show P-values exaggerate evidence! Karma for them, confusion for most everyone else. I’m not meaning to blur that issue with the one in this paper (on which Senn is the expert).

Here’s a relevant post:

https://errorstatistics.com/2015/12/05/beware-of-questionable-front-page-articles-warning-you-to-beware-of-questionable-front-page-articles-2/

I see inference as being part of the general debate of science. So I don’t see things so black and white. We all have views that are wrong and I am no exception. I have to admit a) that my thinking has benefitted from arguing with Bayesians b) that in circumstances where data can help, I do find Bayesian arguments useful (although in such cases frequentist mixed models, often, but not always, give similar results). I am, however, very unenthusiastic about Bayes factors, which I classify as largely ‘Neither fish nor fowl’ although rather often a red herring.

Stephen: I don’t see things as black or white, and I think it’s right to see inference as being part of the general debate of science. I still don’t think it’s fair to say, well so and so is not a statistician, when they are in positions of great influence. Phan may be right that “absolute influence dissipates critical reflection” but what does that mean for attempts to improve statistical understanding? I may have come out sounding a bit stronger than I intended (so I’ve amended my remark slightly), for which I place partial blame on horribly painful ear infections.

Stephen:

I believe you have made the problem with Bayes factors clear enough (“alternative values are supported as a set or not depends on how you smear the prior probability”) that many won’t need to collaborate with a statistician (someone who can adequately do mathematical statistics) to grasp it.

I do think methods of scientific inquiry (which are not black and white) should set out how to evaluate statistics and not so much vice versa. But if issues such as the one above are not grasped, it can’t be done well.

All views are always wrong to some extent but we hope we can continually get less wrong.

Keith O’Rourke

Phan: I don’t know what you mean by saying: “I do think methods of scientific inquiry (which are not black and white) should set out how to evaluate statistics”. I’m not sure if this embodies a supposition that scientists can identify “methods of scientific inquiry” and should say, “so statistics should do this”. Are scientists good at articulating clearly what they do? What warrants what they do? Philosophers of science say no, and there’s nothing far-fetched about this. Conceptual clarity about methods, which is what’s needed for meta-methodology, isn’t generally something practitioners themselves are good at. There are important exceptions, of course.

Dear Stephen,

Thank you for your elaborate blogpost. You make some thought-provoking comments, we will attempt to address each of them in turn. In what follows, we will select relevant excerpts of your post (in quotation marks) with our response directly below them. We apologize in advance for the redundancy: largely we are summarizing here our previous comments that we had made during our private e-mail exchanges (that we greatly appreciated). Initially we thought it was not necessary to place these responses in the blog as well, but Deborah Mayo kindly invited us to write a response to your blogpost, so we were persuaded that others may benefit from seeing both views.

(1) The mishmash

“In my opinion this is a hopeless mishmash of two systems: the first, (frequentist) conditional on the hypotheses and the second (Bayesian) conditional on the data. They cannot be mixed to any useful purpose in the way attempted and the result is not only irrelevant frequentist statistics but irrelevant Bayesian.”

It is true that p-values condition on one (!) hypothesis. Bayes factors represent the ratio of marginal likelihoods, p(y|H1)/p(y|H0). Note that we do not advocate equating them in any way. We evaluate a common application of the FDA policy (which conditions on a point-null) and recommend a different way of evaluating the available evidence (the relative change in plausibility of both hypotheses after observing the data). In our opinion, p-values are of questionable value here (for one, they do not allow one to account for the null-trials in a meaningful way), whereas Bayes factors may present a rational way of taking into account all available evidence.

(2) The number of non-significant trials

“In my opinion it is reasonable to consider that the FDA might accept two out of three but it is frankly incredible that they would accept two out of twenty unless there were further supporting evidence.”

This partly depends on the form in which these trials (if at all) see the light of day. There are many variants of selective outcome and analyses reporting bias and industry and regulatory trials are certainly not immune to them all, even though these trials have probably improved over time. One of us (John) has written many papers about this, e.g. the latest in the BMJ earlier this year on monitoring outcomes reporting that builds on the COMPare experience. Even in the crème de la crème trials, selective reporting and manipulated outcomes are the rule rather than the exception.

(3) The Type I error rate

“If the designs of two trials are identical, then given that they are of the same size, the sufficient statistic is simply the average of the two results. If conducted simultaneously there would be no reason not to use this. This leads to a critical region for a more powerful test based on the average result from the two providing a 1/1600 type I error rate (one-sided) illustrated in the figure below that is to the right and above the blue diagonal line.”

We agree that in a vacuum, if “exactly two trials are run, and the null hypothesis is true, then the probability that both will be significant is 1/1600”. For this exact scenario, Bayes Factors lead to pretty similar (“erroneous”) conclusions as p-values (see top-left panel of Figs. 5 and 6 in our paper). Scenarios of 2 out of “more than 2” are more common under the event where the null hypothesis is true. Actual occurrence rates in practice will likely represent a false picture due to publication bias and selectively reported outcomes. It is in these scenarios that Bayes Factors often lead to different conclusions from the “2 p-values lower than .05” rule. Put simply, when more than 2 trials have been conducted and exactly 2 trials are statistically significant, we should consider all the evidence, not just the evidence of these two trials. Secondly, there is ample evidence from thousands of meta-analyses and clinical experience accumulated over decades that the true “failure rate” of FDA and other regulatory agencies (=”how many licensed drugs are not really that clinically useful”) is probably 100-1000 fold higher (6-60%) than the theoretical 1/1600 (0.06%). This is due to a constellation of multiple factors (selective reporting, data dredging, changed outcomes/analyses, poor surrogate outcomes, non-pragmatic studies, unknown harms at the time of licensing, etc). Any rule that deviates 100-1000 fold from reality is unlikely to be a good one.

“If we want to use true posited values of the effect size, we have to consider all possible outcomes for the two trial rule, not just the ones that indicate ‘register’. For the cases that indicate ‘register’, it is a foregone conclusion that we will have 100% success (in terms of decision-making) in the first two cases and 100% failure in the second. What we need to consider also is the situation where it is not the case that two trials are significant.”

You are quite right that the paper is only about incorrect endorsements and not about incorrect failures to endorse. We are quite clear about our goals from the outset (as reflected in the title and abstract), but we agree that for future work it would be interesting to approach the decision rule from a Type II error rate perspective as well.

(4) The choice of priors

“… Be that as it may, I doubt that choosing, ‘a Cauchy distribution with a width of r = √2/2’ as R&I did would stand any serious scrutiny. Bear in mind that these are molecules that have passed a series of in vitro and in vivo pre-clinical screens as well as phase I, IIa and IIb before being put to the test in phase III. However, if R&I were serious about this, they would consider how well the distribution works as a prediction as to what actually happens in phase III and examine some data.”

We may have to agree to disagree on how plausible the Cauchy distribution with a width of r = √2/2 is for these effects. We are less optimistic about the results of phase III trials a priori, for the reasons we described above. There are, however, two important points we wish to make. (1) the discrepancy between ‘the default Bayes Factor’ as originally conceived by Jeffreys and Bayes Factors calculated for radically different priors is not nearly as large as one would expect (see e.g., Gronau, Ly, & Wagenmakers, 2017, https://arxiv.org/abs/1704.02479, for a number of examples); (2) the Cauchy prior with a width of r = √2/2 places a lot of weight on an effect size of 0. Changing the prior along the lines you suggest (i.e., with a higher mass on non-null effect sizes) will pull the Bayes Factor more in the direction of support for the null hypothesis for medium to large n, thus amplifying our conclusions. Gronau, Ly, & Wagenmakers, 2017 show with an example how this may happen (for their prior, it holds for n>82). The authors say about this: ‘An intuitive explanation … is provided by the Savage-Dickey density ratio representation of the Bayes factor (Dickey and Lientz, 1970): the Bayes factor in favor of H0 equals the ratio of posterior to prior density for delta under the alternative hypothesis evaluated at the test value delta = 0. When n is small, the posterior for delta under the informed hypothesis is similar to the informed prior whereas the less restrictive default prior will be updated more strongly by the data. Hence, in case H0 is true, the ratio of posterior to prior density evaluated at zero will be larger for the default alternative hypothesis than for the informed alternative hypothesis. However, when n grows large, the data start to overwhelm the prior so that the posterior distributions become more similar. In that case, the ratio of posterior to prior density will be larger for the informed alternative hypothesis since its prior density at zero is smaller than that of the default prior.’
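
The Savage-Dickey argument quoted above can be illustrated numerically. What follows is only a rough sketch under loudly stated assumptions: the data are summarised by an observed standardised effect of exactly zero with standard error sqrt(2/n) (a normal approximation, n per group), the ‘informed’ prior is a hypothetical Normal(0.35, 0.1) chosen purely for illustration (it is not the prior used by Gronau, Ly, & Wagenmakers), and both priors are two-sided, unlike the one-sided Bayes factors in the paper. The function names are invented for this sketch.

```python
import math

def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def cauchy_pdf(x, scale):
    return 1.0 / (math.pi * scale * (1.0 + (x / scale) ** 2))

def bf01_savage_dickey(prior_pdf, estimate, se, lo=-6.0, hi=6.0, steps=24000):
    """BF01 as posterior density at delta = 0 divided by prior density at 0."""
    step = (hi - lo) / steps
    # Marginal likelihood under H1 (midpoint rule over a grid of delta values).
    marginal = 0.0
    for i in range(steps):
        delta = lo + (i + 0.5) * step
        marginal += prior_pdf(delta) * normal_pdf(estimate, delta, se)
    marginal *= step
    posterior_at_zero = prior_pdf(0.0) * normal_pdf(estimate, 0.0, se) / marginal
    return posterior_at_zero / prior_pdf(0.0)

def default_prior(delta):
    # Default Cauchy prior with width r = sqrt(2)/2, as in the paper.
    return cauchy_pdf(delta, math.sqrt(2.0) / 2.0)

def informed_prior(delta):
    # HYPOTHETICAL informed prior, for illustration only.
    return normal_pdf(delta, 0.35, 0.10)

def compare(n_per_group, observed_effect=0.0):
    se = math.sqrt(2.0 / n_per_group)  # normal approximation (assumption)
    return {
        "default": bf01_savage_dickey(default_prior, observed_effect, se),
        "informed": bf01_savage_dickey(informed_prior, observed_effect, se),
    }

small_n = compare(10)
large_n = compare(500)
print("n = 10 :", small_n)
print("n = 500:", large_n)
```

Under these assumptions the default prior gives the larger BF01 at n = 10 and the informed prior gives the larger BF01 at n = 500, which is the pattern the quotation describes. The crossover point depends entirely on the hypothetical prior chosen here.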

“How far can the prior distribution from which one simulates be from the prior distribution one assumes for inference in order for the simulation to be a) a severe test but b) not totally irrelevant?”

Quite far, we would argue. The prior specifies the researcher’s a priori belief about the underlying effect size. To say that the prior must be exactly what we simulate from is, in our opinion, to say that researchers should have a crystal ball. For the simulations to be “not totally irrelevant” it is quite important that we do not treat the prior as if we know the generating distribution. If we did, we would not need a posterior or a Bayes factor at all; we would, so to speak, be done and could dispense with the data.

Finally, we are not responsible for any earthquakes attributed to John. John does not even have a Twitter account.

Thank you again for your thoughtful post. Kind regards,

Don & John

Don and John:

There are people at regulatory agencies that you can talk to to get a better sense of what actually happens (John you have my email, just email me if you want me to refer you to someone).

> This partly depends on the form in which these trials (if at all) see the light of day.

It’s rare that any trials are not pre-discussed with a regulatory agency and tracked from the start.

Anything that wasn’t, if it was considered at all, would likely only be considered as supportive.

When I was in that area, I had almost no concerns about selective reporting of trials at all (a huge change from the published literature).

Keith O’Rourke

Thanks Don and John. I am going to leave a number of these points as matters we agree to disagree on (on the basis of previous email exchanges) but respond to some. (The numbering does not reflect the numbering of your points)

1) First a point of clarification. The purpose of my first diagram was to find the likelihood corresponding to the combination of P-values (for two trials only) that gave least support for H1. As the diagram shows, there are other combinations of P-values in which only one corresponds to ‘significance’ that give more support. As I have argued for at least 20 years (see also the references in reply to Stan Young above) if the FDA is going to require two trials but not allow meta-analysis, then it would be logical to require different trial designs from sponsors. However, there is also an interesting corollary. If the Cochrane Collaboration (CC) is going to allow meta-analysis of identical trials (against which I do not wish to argue) and wishes to match the evidential value of the FDA’s two trials rule, then the z-value the CC should be looking for is about 2.77 or a P-value of 0.0056. (Interestingly, I think we can all think of at least one example where the FDA reacted swiftly because a meta-analysis of harms produced moderate significance against a drug.)

2) (One of the points under your 3.) I have never implied that a one-sided P-value of 1/1600 corresponds to a 1/1600 probability of the null being true nor that it ought to. I have consistently maintained that it is a different system. Furthermore, one must be careful here. To say that this could be the reason that the failure rate is high confuses two matters: a) the evidential value of P=0.05 (twice) and b) the consequence of using the rule P <=0.05 (twice). Usually, it is frequentists who are accused of confusing these two things but here I think there is a danger of the reverse confusion. If the prior you adopt for the Bayes factor (BF) reasonably describes frequencies of treatment effects in drug regulation, then two significant results close to P=0.05 will be rather rare (as indeed you found). If, under the circumstances, they disagreed with BFs, this then becomes a Bayesian argument for tightening the rule along the lines of 'actually for little cost you could drop the required significance level: you will hardly ever get this case and when you do it will often be a false positive'. The argument is quite different from saying 'this rule produces lots of false positives'. For that you need an analysis along the lines of John's 2005 paper (albeit now with different prior distributions).

3) (Again picking up on your 3.) We don't disagree on the value of putting all the trial results together in some sensible way and to the extent that we have evidence that the FDA is ignoring non-significant trials, this is a problem (but my limited interaction with the Agency suggests they don't). But I hardly see in what sense this is an argument for BFs per se.

4) You state "Actual occurrence rates in practice will likely represent a false picture due to publication bias and selectively reported outcomes". I think you are endorsing by implication a common claim of certain members of the evidence based medicine movement that this is a feature of drug regulation, whereas I would maintain it is fairly well-policed by the regulator but hardly at all by the journals. The FDA rarely lets the NEJM decide what it registers. (I know of at least one example where it has reprimanded a leading journal.) However, the point is that whether or not you agree, it is a complete red herring here. I fail to see how calculating BFs is going to solve selective reporting.

5) Your final point is well taken.
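
The figures quoted in point 1) can be checked with a few lines of arithmetic. This is a minimal sketch, assuming two equally sized trials that are each just significant at the two-sided 5% level and an equal-weight fixed-effect pooling of their z-values; the variable names are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()

# z-value of a single trial that is just significant at two-sided 0.05
z_single = std_normal.inv_cdf(1 - 0.05 / 2)

# Pooled z for two such trials of equal size (equal-weight fixed-effect pooling)
z_pooled = (z_single + z_single) / sqrt(2)

# Two-sided P-value corresponding to the pooled z
p_pooled = 2 * (1 - std_normal.cdf(z_pooled))

# Under H0, chance that two independent trials are both significant
# in the favourable direction (one-sided 1/40 each)
p_two_trials = (0.05 / 2) ** 2

print(f"pooled z = {z_pooled:.2f}, two-sided P = {p_pooled:.4f}")
print(f"two-trials type I error = 1/{1 / p_two_trials:.0f}")
```

The pooled z comes out at about 2.77 and the matching two-sided P-value at about 0.0056, while the one-sided type I error of the two trials rule is 1/1600.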

Dear Stephen,

Just one final clarification: of course, in general, type I error rate does not equal “how many licensed drugs are not really that clinically useful”. However, in the case of very well-powered phase III trials where two of them get p<0.05 and thus get licensed as being (seemingly) useful, it is true that the composite type I error (1/1600) closely approximates the probability that the drugs are not useful, 1-PPV (1-positive predictive value), because as type II error tends towards 0, we get 1-PPV=alpha/(R+alpha). Given that in these trials one assumes (rightly or wrongly) equipoise when they get started, i.e. R=1, we get 1-PPV=alpha/(1+alpha) which means that practically 1-PPV=alpha. This is a very special case and this is why we stated that the theoretical failure rate is 1/1600. Obviously, the real failure rate is 100-1000 times higher than that theoretical failure rate. We still believe that emphasis on the p-values and having two of them be <0.05 facilitates this impressive disconnect from reality by paying less or no attention to prior odds, nature of outcomes and magnitude of the effect sizes (and thus clinical utility), and potential biases. Bayesian approaches would not necessarily eliminate these problems, but it would be far more difficult to silence them, as one explicitly needs to think about them while making calculations in that framework. For similar reasons, we are unsympathetic to meta-analysis using p-values. Meta-analysis of p-values has gained acceptance only in fields where people don’t care about the magnitude of the effect sizes, e.g. genetic epidemiology. For clinical trials of medical interventions, knowing the effect sizes (and thus the clinical utility), understanding the prior odds and biases, and telling whether the treatment effect is likely to be true rather than false-positive (as opposed to whether the p-value looks nice) are all totally essential.
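
The special case above can be written out as a small calculation. This is a sketch only: it assumes the bias-free form 1-PPV = alpha/((1-beta)R + alpha), which reduces to alpha/(R+alpha) as the type II error beta tends to 0, and the alternative prior odds of 0.1 are a hypothetical value added purely for comparison.

```python
def one_minus_ppv(alpha, prior_odds, beta=0.0):
    """Probability that a 'positive' finding is false, ignoring bias.

    alpha: type I error, beta: type II error, prior_odds: R (true to null).
    """
    power = 1.0 - beta
    return alpha / (power * prior_odds + alpha)

# Composite one-sided type I error of the two trials rule: (1/40)^2 = 1/1600
alpha_two_trials = (1 / 40) ** 2

# Equipoise (R = 1) and near-perfect power, as in the text
at_equipoise = one_minus_ppv(alpha_two_trials, prior_odds=1.0)

# HYPOTHETICAL less favourable prior odds, for comparison only
at_low_odds = one_minus_ppv(alpha_two_trials, prior_odds=0.1)

print(f"R = 1.0: 1-PPV = {at_equipoise:.6f} (alpha = {alpha_two_trials:.6f})")
print(f"R = 0.1: 1-PPV = {at_low_odds:.6f}")
```

With R = 1 the result is 1/1601, practically equal to alpha, which is the sense in which the theoretical failure rate is 1/1600.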

Kind regards,

Don and John

Dear Don and John,

1) I am not proposing and never have proposed meta-analysis using P-values except as a last resort when one does not have access to the original data (or at least sufficient statistics), which would never be the situation for the FDA. As I explained 20 years ago in my book Statistical Issues in Drug Development, a reason to prefer two trials, each significant, to some fixed effects meta-analysis based on the original data, would be that one feared some ‘random gremlin’, so that two trials, each significant, provided more protection than the combined information. However, given that the FDA seems to accept pretty much identical protocols, the argument of protection against the random gremlin is then not relevant.

2) In your paper you stated “We calculated one-sided JZS Bayes factors for the combined data from the total number of trials conducted”. My Figure 1 identifies the z statistic that results from the pooled data which corresponds to having two trials that are just significant. I did this in order to illustrate the case of the weakest possible evidence that two out of two just significant trials could provide. I was pointing out that it corresponded to having a single Z statistic of about 2.77.

3) I then suggested in my reply to you that this has an interesting implication for the Cochrane Collaboration if it wishes to match the two out of two rule (I see no signs of CC adopting BFs). In fact, I have also argued that regulators would be right to refuse to accept a meta-analysis as a substitute for two trials each significant if a lower significance level were not targeted.

4) I will make one further remark about your use and defence of your ‘automatic’ Cauchy for the Bayes factor. It all depends very much when you pick the problem up as to what prior you should use. If you were to do your analysis sequentially, start with your prior distribution P0, add the data from one trial D1, calculate the posterior distribution to get P1 = P0+D1, and now do the same for your second trial, you have, of course, to use P1 as your prior for D2 so that you get P2 = P1+D2 = P0+D1+D2. This follows, because, of course, to the extent defined by the model, prior and data are exchangeable and as we all know, “today’s posterior becomes tomorrow’s prior”. It follows, therefore, that P1 cannot at all be like your P0. At some point in your Bayesian calculations you have to stop believing in default Cauchy. Of course, it is a potential strength of Bayesian methods, of which we are often reminded, that they can combine information from all sources. If you believe any information at all is added by drug research and development prior to phase III, it would be a difficult exercise (since the modelling involved would be much more complicated) but very interesting, to find out what the paleo prior (P-1 or lower) is lurking behind that Phase III P0 (of course the data we have are bedevilled by our ignorance of the counterfactual fate of false negatives). Still, it would be an interesting exercise. If you think your Cauchy would also be appropriate then, then we have a brilliant way to reduce the costs of drug development – proceed straight to phase III. It all seems a long way from the advice in the paper by Rouder et al, which was “Moreover, analysts can and should consider their goals and expectations when specifying priors. Simply put, principled inference is a thoughtful process that cannot be performed by rigid adherence to defaults.” (p235)
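
The sequential updating described in point 4) can be sketched on a grid. This is an illustration under stated assumptions, not a reconstruction of the JZS calculation in the paper: each trial is summarised by a hypothetical standardised effect estimate with a normal likelihood and a hypothetical standard error of 0.10, and the prior P0 is the default Cauchy with width √2/2 restricted to effects between -5 and 5.

```python
import math

def cauchy_pdf(x, scale):
    return 1.0 / (math.pi * scale * (1.0 + (x / scale) ** 2))

def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def normalise(weights, step):
    total = sum(weights) * step
    return [w / total for w in weights]

def update(prior, grid, estimate, se, step):
    """Posterior on the grid: prior times normal likelihood, renormalised."""
    unnormalised = [p * normal_pdf(estimate, delta, se)
                    for p, delta in zip(prior, grid)]
    return normalise(unnormalised, step)

step = 0.001
grid = [-5.0 + i * step for i in range(10001)]
mid = len(grid) // 2  # grid point at (or next to) delta = 0

# P0: default Cauchy prior with width r = sqrt(2)/2
p0 = normalise([cauchy_pdf(delta, math.sqrt(2.0) / 2.0) for delta in grid], step)

# HYPOTHETICAL trial summaries (effect estimates and common standard error)
d1, d2, se = 0.30, 0.25, 0.10

# Sequential route: P1 = P0 + D1, then P2 = P1 + D2
p1 = update(p0, grid, d1, se, step)
p2_sequential = update(p1, grid, d2, se, step)

# Batch route: P2 = P0 + D1 + D2 in one step
joint = [p * normal_pdf(d1, delta, se) * normal_pdf(d2, delta, se)
         for p, delta in zip(p0, grid)]
p2_batch = normalise(joint, step)

max_gap = max(abs(a - b) for a, b in zip(p2_sequential, p2_batch))
print("largest difference between the two routes:", max_gap)
print("density at zero, P0 vs P1:", p0[mid], p1[mid])
```

The two routes agree up to floating-point error, and with these hypothetical numbers the prior that the second trial inherits (P1) already has far less density at zero than the default Cauchy P0.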

I would like to suggest that much disagreement happens because Bayes’ simple rule (and Bayes factors) does not provide an adequately detailed model on which to base scientific or clinical reasoning and discussion (e.g. by merely assuming statistical independence when updating priors to create posterior probabilities). In clinical reasoning, models based on Bayes’ EXPANDED rule are needed to reason in a more detailed way with the different ‘factors’ that affect clinical decisions; the same appears to be the case for scientific conclusions. This involves the ‘probability syllogism’ and reasoning by probabilistic elimination (see: http://oxfordmedicine.com/view/10.1093/med/9780199679867.001.0001/med-9780199679867-chapter-13).

In discussions with clinical colleagues and statisticians, the first step is to establish the accuracy of the observations and the way that they are reported. This can be done by going through a checklist that considers poor training, genuine errors, dishonesty, presence or absence of original records, trial data sheets, preregistration of studies, etc., and by a process of ‘probabilistic elimination’, hopefully showing that all these sources of methodological inaccuracy in the checklist are improbable and that the methods and reported observations are therefore probably accurate (e.g. with a subjective probability of 0.90). Without such accuracy it would not be possible to repeat an observation, let alone estimate the probability of replication.

If the observations are accurate, then the next step is to consider the role of chance on the probability of long-term replication. In scientific studies that involve observed means or proportions, this will be based on statistical sampling theory. For example, a study might be regarded as a sampling from possible large populations with a distribution of prior proportions conditional on some universal set, e.g. (a) with one population proportion being 1 million / 100 million nearer to the tail, and (b) another of 150 million / 1,000 million nearer the mode. Note that the prior probability of (a) is 1/10th that of (b) (i.e. 100 million/N to 1,000 million/N). If we made 100,000 sampling observations from each of these original populations as a ‘near perfect study’, then the sample from (a) would be 1,000/100,000 = 1% and from (b) it would be 15,000/100,000 = 15%. It is important to note that the sample proportion is not affected at all by the prior probability of each possible original population, where the prior probability of (a) was 1/10th that of (b). I would be interested in everyone’s views about this.

In the scientific study, there will only be one ‘true’ population, so what we have to consider is possible long-term sample results from 0/100,000 to 100,000/100,000, all of which are equally probable as each is based on 100,001 subsets each of 100,000 elements (each element being the result of a single sampling). There is no need to postulate ‘indifference’ – sampling is a special case where each long-term sampling possibility is by definition equally probable. The study result e.g. of 15/24 can be regarded as a sample drawn from equally probable long-term study results from 0/100,000 to 100,000/100,000. In this case the probability of any ‘true’ result conditional on a sample (e.g. 15/24) will be the same as the inverse likelihood of selecting 15/24 from the true long-term sampling result. For example, on the basis of an observed sample of 15/24, we might estimate that the probability of a long-term mean falling between 44% and 80% would be 0.95.
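
The 15/24 example can be checked approximately. The sketch below assumes that the 100,001 equally probable long-term results are well approximated by a continuous uniform distribution on the long-term proportion, so that the probability conditional on 15/24 follows a Beta(16, 10) distribution; that continuous approximation, and the function names, are assumptions made for illustration.

```python
import math

def beta_pdf(p, a, b):
    """Density of a Beta(a, b) distribution at p, for 0 < p < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(p) + (b - 1) * math.log(1 - p))

def beta_prob_between(lo, hi, a, b, steps=20000):
    """Probability that the proportion lies in (lo, hi), by the midpoint rule."""
    step = (hi - lo) / steps
    return step * sum(beta_pdf(lo + (i + 0.5) * step, a, b) for i in range(steps))

successes, trials = 15, 24

# Equally probable long-term proportions plus binomial sampling give Beta(16, 10)
a, b = successes + 1, trials - successes + 1

prob_44_to_80 = beta_prob_between(0.44, 0.80, a, b)
total_mass = beta_prob_between(0.0, 1.0, a, b)  # sanity check, should be about 1

print(f"P(0.44 < long-term proportion < 0.80 | 15/24) = {prob_44_to_80:.3f}")
```

Under this approximation the probability comes out close to the 0.95 quoted above.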

This means that the probability of the observed result or something more extreme conditional on the null hypothesis will be the same as the probability of the null hypothesis or something more extreme conditional on the observed result. The probability of something less extreme will be 1-P. The problem is that the latter will contain true results that are barely more than null. If we only regarded results at least one SEM less extreme than null as scientifically significant, then the probability would be approximately 1-P – 0.16. So, unlike medical situations, the base-rate prior probabilities of the possible outcomes of long term sampling are always equal. The calculation of a posterior probability from a Bayesian prior distribution can instead be regarded as a form of meta-analysis where the subjective data on which the non base rate prior distribution is based is combined with the observed study data.

If the probability that the methods and results were described accurately was 0.9 and the probability was 0.95 that the result of 15/24 would be replicated in the long term between 44% and 80% IF the result was described accurately, then the syllogistic probability that the result would lie between 44% and 80% conditional on the methods as described would be 0.9 × 0.95 = 0.855. Even if the study could probably (at 0.855) be replicated, other factors have to be taken into account to estimate the probability of a true discovery, such as biases that would be repeated even if the study was repeated accurately and replicated in the same setting (e.g. lack of adequate controls or proper randomization). Some might also wish to estimate the probability of replicating the study in different laboratories with different reagents or different populations with different racial mixes for example.

The probability of various underlying hypotheses that explained the findings would have to be considered in the light of other studies. This reasoning would also have to be based on probability syllogisms and reasoning by probabilistic elimination. At each stage, the probability of a ‘true’ discovery would fall. The probability of long-term replication based on the 15/24 data alone (e.g. of 0.95 after 100,000 observations) within some range can therefore be regarded as an upper bound of a ‘true discovery’, the probability of the latter being therefore between 0 and 0.95.

Huw, I certainly agree with you that more complex approaches than given by simple recipes can be necessary when calculating Bayesian posterior distributions. My experience is in statistics of clinical trials not clinical reasoning, where you are an expert, and I am not going to argue with you there. I think that many Bayesians would dislike, however, the suggestion that a new name of Expanded Rule is required, since this would imply that Bayesian inference only covers simple cases. After all, much frequentist inference is taught with the example of simple random samples (a teaching habit which has, I readily concede, some dangerous side-effects) but this does not limit frequentist inference to simple random samples, even though going beyond this has some interesting consequences [1].

Deborah has kindly hosted a couple of blogs of mine on the subject of more complex situations. See [2,3]. However, good applied Bayesian biostatisticians deal with much more complex examples than covered in those two blogs and were starting to do so in the pharmaceutical industry more than 30 years ago, even before the MCMC revolution [4].

I am attending the 4th Bayesian, Fiducial and Frequentist inference conference [4] at Harvard starting today (1 May) and am looking forward to learning more about Jack Good, who was a pioneer of thinking hierarchically

References

[1] “…estimation and prediction are not the same except by accident. It is misleading that a standard statistical paradigm, to which textbooks often return, is that of estimating a population mean using simple random sampling. For this purpose, the parameter estimate of the simple model is, indeed, the same as the prediction” Senn, S. J. (2004). Conditional and marginal models: Another view – Comments and rejoinders. Statistical Science, 19(2), 228-238 https://projecteuclid.org/download/pdfview_1/euclid.ss/1105714159

[2] Dawid’s selection paradox https://errorstatistics.com/2013/12/03/stephen-senn-dawids-selection-paradox-guest-post/

[3] A paradox of prior probabilities https://errorstatistics.com/2012/05/01/stephen-senn-a-paradox-of-prior-probabilities/

[4] Racine, A., Grieve, A. P., Fluhler, H., & Smith, A. F. M. (1986). Bayesian Methods in Practice – Experiences in the Pharmaceutical-Industry. Applied Statistics-Journal of the Royal Statistical Society Series C, 35(2), 93-150

I am afraid I garbled a reference. That should have read

“I am attending the 4th Bayesian, Fiducial and Frequentist inference conference [5] …”

Reference

[5] BFF4 http://statistics.fas.harvard.edu/program