2025-6 Leisurely Cruise

The following is the February stop of our leisurely cruise (meeting 6 from my 2020 Seminar at the LSE). There was a guest speaker, Professor David Hand. Slides and videos are below. Ship StatInfasSt may head back to port or continue for an additional stop or two, if there is interest. Although I often say on this blog that the classical notion of power, as defined by Neyman and Pearson, is one of the most misunderstood notions in stat foundations, I did not know, in writing SIST, just how ingrained those misconceptions would become. I’ll write more on this in my next post. (The following is from SIST pp. 354-356; the pages are provided below.)

Shpower and Retrospective Power Analysis

It’s unusual to hear books condemn an approach in a hush-hush sort of way without explaining what’s so bad about it. This is the case with something called post hoc power analysis, practiced by some who live on the outskirts of Power Peninsula. Psst, don’t go there. We hear “there’s a sinister side to statistical power, … I’m referring to post hoc power” (Cumming 2012, pp. 340-1), also called observed power and retrospective (retro) power. I will be calling it shpower analysis. It distorts the logic of ordinary power analysis (from insignificant results). The “post hoc” part comes in because it’s based on the observed results. The trouble is that ordinary power analysis is also post-data. The criticisms are often wrongly taken to reject both.

Shpower evaluates power with respect to the hypothesis that the population effect size (discrepancy) equals the observed effect size, for example, that the parameter μ equals the observed mean. In T+ this would be to set μ = x. Conveniently, their examples use variations on test T+. We may define:

The Shpower of test T+: Pr(X > xα; μ = x)

The thinking, presumably, is that, since we don’t know the value of μ, we might use the observed x to estimate it, and then compute power in the usual way, except substituting the observed value. But a moment’s thought shows the problem – at least for the purpose of using power analysis to interpret insignificant results. Why?

Since alternative μ is set equal to the observed x, and x is given as statistically insignificant, we know we are in Case 1 from Section 5.1: the power can never exceed 0.5. In other words, since x < xα, the shpower = POW(T+, μ = x) < 0.5. But power analytic reasoning is all about finding an alternative against which the test has high capability to have rung the significance bell, were that the true parameter value – high power. Shpower is always “slim” (to echo Neyman) against such alternatives. Unsurprisingly, then, shpower analytical reasoning has been roundly criticized in the literature. But the critics think they’re maligning power analytic reasoning.
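To see the 0.5 ceiling numerically, here is a minimal sketch. It assumes that T+ is a one-sided Normal test of μ ≤ μ0 against μ > μ0 with known σ, and that x stands for the observed sample mean. The values of MU0, SIGMA, N and ALPHA, and the function names, are hypothetical choices for illustration; none of them come from SIST.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution

# Hypothetical setup for test T+ (all values are illustrative assumptions).
MU0, SIGMA, N, ALPHA = 0.0, 1.0, 25, 0.025
SE = SIGMA / sqrt(N)              # standard error of the sample mean
C_ALPHA = Z.inv_cdf(1 - ALPHA)    # cutoff for d(X), roughly 1.96
X_ALPHA = MU0 + C_ALPHA * SE      # the same cutoff on the sample-mean scale


def power(mu_alt):
    """Ordinary power: Pr(sample mean > X_ALPHA; mu = mu_alt)."""
    return 1 - Z.cdf((X_ALPHA - mu_alt) / SE)


def shpower(x_obs):
    """Shpower: ordinary power with mu set equal to the observed mean."""
    return power(x_obs)


# Insignificant observed means (all below X_ALPHA), then the cutoff itself.
for x_obs in (0.0, 0.1, 0.2, 0.3, X_ALPHA):
    print(f"observed mean = {x_obs:.3f}   shpower = {shpower(x_obs):.3f}")
```

Under these assumptions, every observed mean below the cutoff gives a shpower below 0.5, and the value 0.5 is reached only when the observed mean sits exactly at the cutoff.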

Now we know the severe tester insists on using attained power Pr(d(X) > d(x0); μ’) to evaluate severity, but when addressing the criticisms of power analysis, we have to stick to ordinary power:[1]

Ordinary power: POW(μ’): Pr(d(X) > cα; μ’)

Shpower (aka post hoc or retro power): Pr(d(X) > cα; μ = x)

An article by Hoenig and Heisey (2001) (“The Abuse of Power”) calls power analysis abusive. Is it? Aris Spanos and I say no (in a 2002 note on them), but the journal declined to publish it [because the deadline for comments had passed]. Since then their slips have spread like kudzu through the literature.

Howlers of Shpower Analysis

Hoenig and Heisey notice that within the class of insignificant results, the more significant the observed x is, the higher the “observed power” against μ = x, until it reaches 0.5 (when x reaches xα and becomes significant). “That’s backwards!” they howl. It is backwards if “observed power” is defined as shpower. Because, if you were to regard higher shpower as indicating better evidence for the null, you’d be saying the more statistically significant the observed difference (between x and μ0), the more the evidence of the absence of a discrepancy from the null hypothesis μ0. That would contradict the logic of tests.
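The pattern Hoenig and Heisey notice can be tabulated directly. This is a sketch under the same kind of assumptions as any T+ illustration: a one-sided Normal test with known σ, with x read as the observed sample mean. The numbers and helper names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution

# Illustrative assumptions, not values from the text.
MU0, SIGMA, N, ALPHA = 0.0, 1.0, 25, 0.025
SE = SIGMA / sqrt(N)
X_ALPHA = MU0 + Z.inv_cdf(1 - ALPHA) * SE   # cutoff for the sample mean


def p_value(x_obs):
    """One-sided P-value: Pr(sample mean >= x_obs; mu = MU0)."""
    return 1 - Z.cdf((x_obs - MU0) / SE)


def shpower(x_obs):
    """Pr(sample mean > X_ALPHA; mu = x_obs)."""
    return 1 - Z.cdf((X_ALPHA - x_obs) / SE)


# All of these observed means fall short of the cutoff (insignificant).
observed = [0.0, 0.1, 0.2, 0.3, 0.39]
rows = [(x, p_value(x), shpower(x)) for x in observed]

print("x_obs   P-value   shpower")
for x, p, s in rows:
    print(f"{x:5.2f}   {p:7.4f}   {s:7.4f}")
```

As the observed mean moves toward the cutoff, the P-value falls and the shpower rises together. That is why reading high shpower as evidence for the null runs backwards.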

Two fallacies are being committed here. The first we dealt with in discussing Greenland: namely, supposing that a negative result, with high power against μ1, is evidence for the null rather than merely evidence that μ < μ1. The more serious fallacy is that their “observed power” is shpower. Neither Cohen nor Neyman defines power analysis this way. It is concluded that power analysis is paradoxical and inconsistent with P-value reasoning. You should really only conclude that shpower analytic reasoning is paradoxical. If you’ve redefined a concept and find that a principle that held with the original concept is contradicted, you should suspect your redefinition. It might have other uses, but there is no warrant to discredit the original notion.

The shpower computation is asking: What’s the probability of getting X > xα under μ = x? We still have that the larger the power (against μ = x), the better x indicates that μ < x – as in ordinary power analysis – it’s just that the indication is never more than 0.5. Other papers and even instructional manuals (Ellis 2010) assume shpower as what retrospective power analysis must mean, and ridicule it because “a nonsignificant result will almost always be associated with low statistical power” (p. 60). Not so. I’m afraid that observed power and retrospective power are both used in the literature to mean shpower. What about my use of severity? Severity will replace the cutoff for rejection with the observed value of the test statistic (i.e., Pr(d(X) > d(x0); μ1)), but not the parameter value μ. You might say, we don’t know the value of μ1. True, but that doesn’t stop us from forming power or severity curves and interpreting results accordingly. Let’s leave shpower and consider criticisms of ordinary power analysis. Again, pointing to Hoenig and Heisey’s article (2001) is ubiquitous.
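A short sketch of how a severity curve might be formed for an insignificant result follows. It assumes T+ is a one-sided Normal test with known σ. The observed mean X_OBS, the other numbers, and the function names are hypothetical. The point is only that the cutoff is replaced by the observed value while μ1 is left free to vary.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution

# Illustrative assumptions, not values from the text.
MU0, SIGMA, N, ALPHA = 0.0, 1.0, 25, 0.025
SE = SIGMA / sqrt(N)
X_ALPHA = MU0 + Z.inv_cdf(1 - ALPHA) * SE   # cutoff for the sample mean
X_OBS = 0.2                                 # hypothetical insignificant result


def ordinary_power(mu1):
    """Pr(sample mean > X_ALPHA; mu = mu1): uses the rejection cutoff."""
    return 1 - Z.cdf((X_ALPHA - mu1) / SE)


def severity(mu1, x_obs=X_OBS):
    """Pr(d(X) > d(x0); mu = mu1): uses the observed value, not the cutoff."""
    return 1 - Z.cdf((x_obs - mu1) / SE)


print("mu1    power   severity for 'mu < mu1'")
for mu1 in (0.2, 0.4, 0.6, 0.8):
    print(f"{mu1:.1f}   {ordinary_power(mu1):.3f}   {severity(mu1):.3f}")
```

In this sketch, setting μ1 equal to the observed mean gives exactly 0.5, while larger values of μ1 give higher values, so the curve is informative even though μ1 is unknown.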

[1] In deciphering existing discussions on ordinary power analysis, we can suppose that d(x0) happens to be exactly at the cut-off for rejection, in discussing significant results; and just misses the cut-off for discussions on insignificant results in test T+. Then att-power for μ1 equals ordinary power for μ1.
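The footnote's point can be checked in a few lines, again assuming a one-sided Normal T+ with known σ and hypothetical numbers and function names. Attained power and ordinary power coincide when d(x0) equals the cutoff.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution

# Illustrative assumptions, not values from the text.
MU0, SIGMA, N, ALPHA = 0.0, 1.0, 25, 0.025
SE = SIGMA / sqrt(N)
C_ALPHA = Z.inv_cdf(1 - ALPHA)   # cutoff for the test statistic d(X)


def ordinary_power(mu1):
    """Pr(d(X) > C_ALPHA; mu = mu1)."""
    return 1 - Z.cdf(C_ALPHA - (mu1 - MU0) / SE)


def attained_power(d_obs, mu1):
    """Pr(d(X) > d_obs; mu = mu1), with d_obs the observed statistic."""
    return 1 - Z.cdf(d_obs - (mu1 - MU0) / SE)


mu1 = 0.6
print("ordinary power:           ", round(ordinary_power(mu1), 4))
print("att-power, d(x0) = cutoff:", round(attained_power(C_ALPHA, mu1), 4))
print("att-power, d(x0) = 1.0:   ", round(attained_power(1.0, mu1), 4))
```

When d(x0) falls below the cutoff, the attained power exceeds the ordinary power for the same μ1.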

Reading:

SIST Excursion 5 Tour I (pp. 323-332; 338-344; 346-352), Tour II (pp. 353-6; 361-370), and Farewell Keepsake pp. 436-444

Recommended (if time) What Ever Happened to Bayesian Foundations (Excursion 6 Tour I)

Mayo Memos for Meeting 6:

-Souvenirs Meeting 6: W: The Severity Interpretation of Negative Results (SIN) for Test T+; X: Power and Severity Analysis; Z: Understanding Tribal Warfare

-Selected blogposts on Power

- 05/08/17: How to tell what’s true about power if you’re practicing within the error-statistical tribe

- 12/12/17: How to avoid making mountains out of molehills (using power and severity)

There is also a guest speaker, Professor David Hand:

“Trustworthiness of Statistical Analysis”

_______________________________________________________________________________________________________

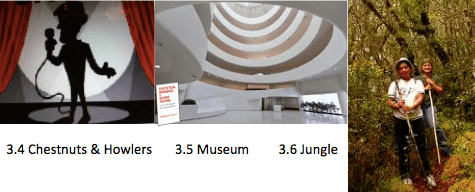

Slides & Video Links for Meeting 6:

Slides: Mayo 2nd Draft slides for 25 June (not beautiful)

Video of Meeting #6: (Viewing Videos in full screen mode helps with buffering issues.)

VIDEO LINK: https://wp.me/abBgTB-mZ

VIDEO LINK to David Hand’s Presentation: https://wp.me/abBgTB-mS

David Hand’s recorded Powerpoint slides: https://wp.me/abBgTB-n4

AUDIO LINK to David Hand’s Presentation & Discussion: https://wp.me/abBgTB-nm

Another link is here.

Please share your thoughts and queries in the comments.